Discover our content

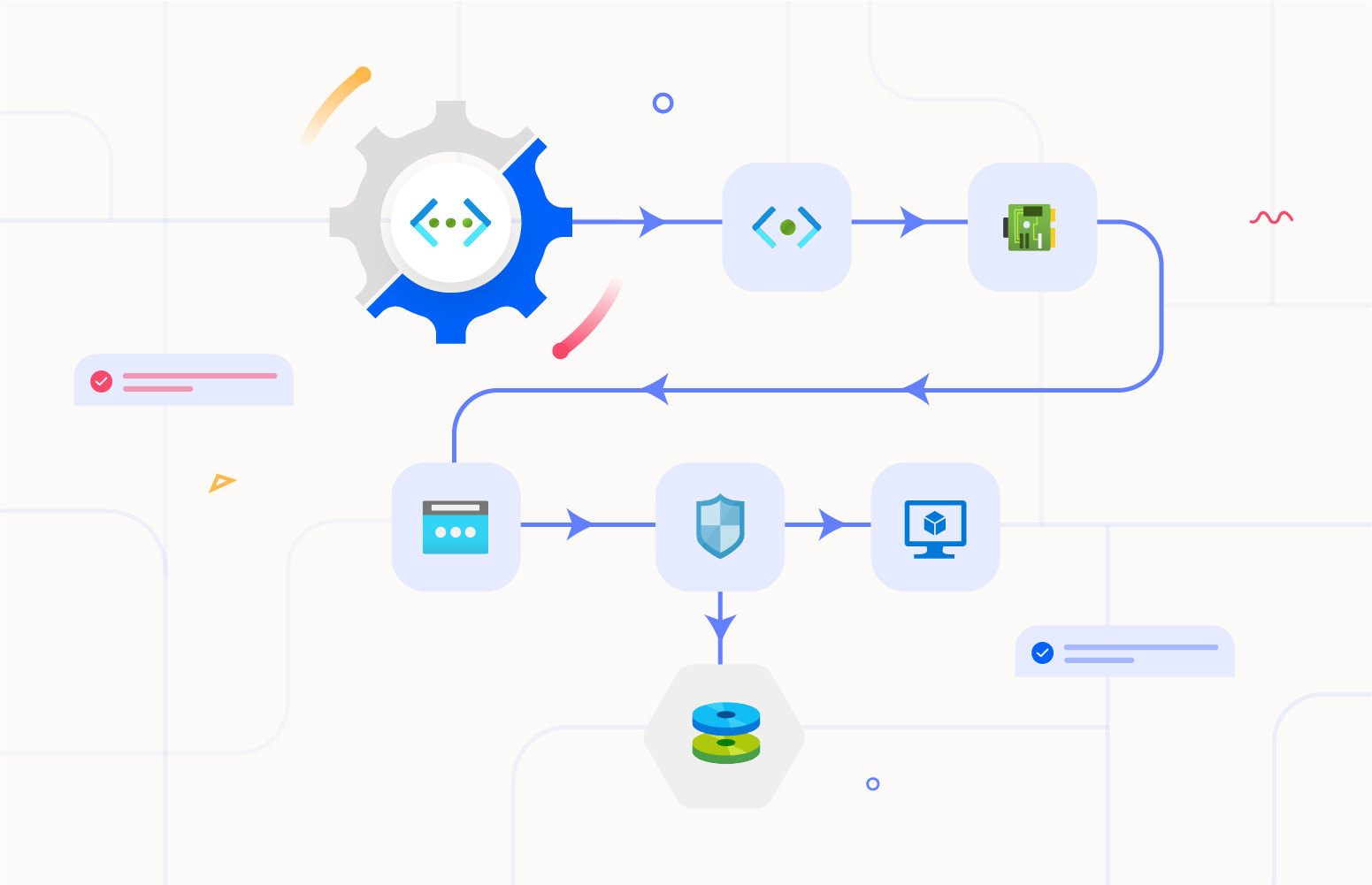

Azure network diagram generator with automation

Apr 10, 2024 • Nadeem Ahamed

Today, many of your cloud solutions will be connected to a private network …

Azure Cost Analysis at the Service Name and Meter level

Apr 9, 2024 • Praveena Jayanarayanan

Azure provides a wide array of services, each with its own pricing model. …

Elevating Azure Management Solutions Together – Turbo3...

Apr 2, 2024 • Mohan Nagaraj

We are delighted to share with you an exciting development that underscores our …

Tailored Azure cost savings notification and alerts

Mar 29, 2024 • Nadeem Ahamed

When an organization attempts to reduce costs immediately, one of its go-to options …

Optimize Azure spending with Turbo360 periodic notifications

Mar 28, 2024 • Modhana

Is your Azure spend management getting out of control? You’re not alone. Countless …

Implementing Azure Cost Circuit Breakers for Budget Protection

Mar 25, 2024 • Michael Stephenson

Recently, when recording an episode of the FinOps on Azure podcast with Rik …

Turbo360 and Contica AB Join Forces to Revolutionize Azure M...

Mar 22, 2024 • Mohan Nagaraj

We’re excited to share some thrilling news with our community! Turbo360 has forged …

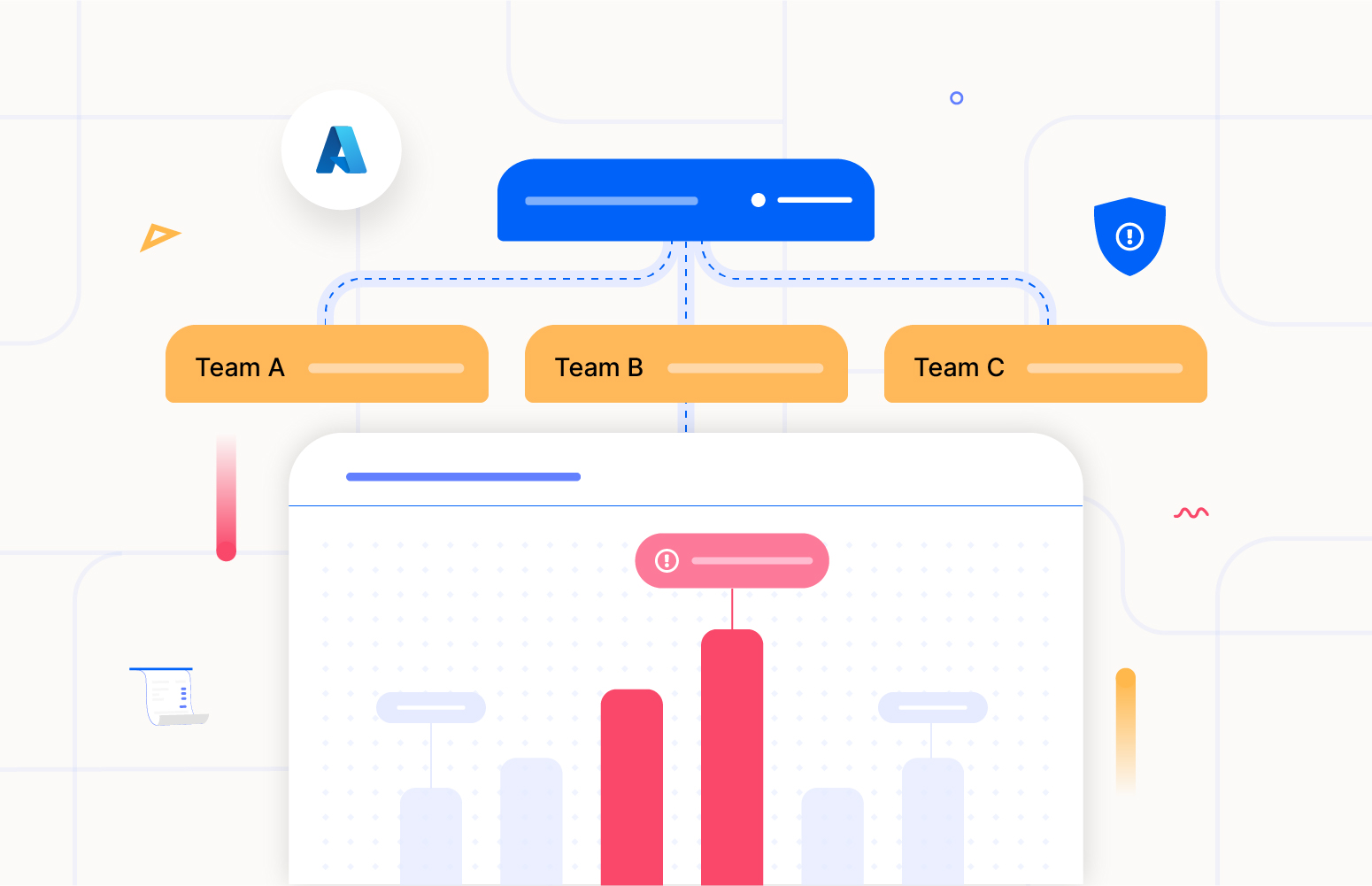

Decoding SaaS Customer Cost: A Guide to Calculating Cost per...

Mar 21, 2024 • Nadeem Ahamed

In the SaaS era, especially in the B2B segment, the business’s profitability will …

How Azure cost anomaly detection shields billing shocks

Mar 15, 2024 • Nadeem Ahamed

One of the fundamental promises of the cloud, when organizations embrace it, is …