After an exciting Day 1 at INTEGRATE 2019 with loads of valuable content from the Microsoft Product team, it was time to get started with Day 2.

Table of Contents

- 5 tips for production ready Azure Functions – Alex Karcher

- API Management: deep dive – Part 1 – Miao Jiang

- Event Grid update – Bahram Banisadr

- Hacking Logic Apps – Derek Li

- API Management: deep dive – Part 2 – Mike Budzynski

- Making Azure Integration Services Real – Matthew Farmer

- Azure Logic Apps vs Microsoft Flow, why not both? – Kent Weare

- Your Azure Serverless Management simplified using Turbo360 – Saravana Kumar

- Monitoring Cloud- and Hybrid Integration Solution Challenges – Steef-Jan Wiggers

- Modernizing Integrations – Richard Seroter

- Cloud architecture recipes for the Enterprise – Eldert Grootenboer

5 tips for production ready Azure Functions – Alex Karcher

The second day of #Integrate2019 started with a session by Alex Karcher on 5 Tips for Production ready Azure Functions.

The five major tips for production-ready Azure Functions presented were:

- Serverless APIs & HTTP

- Event stream processing

- EventHub scale options

- Azure DevOps CI / CD

- Monitoring & Diagnostics

Serverless APIs & HTTP

This is the most common scenario: using Azure Functions behind a public-facing HTTP endpoint. Starbucks and Honeywell use this capability of Azure Functions for their HTTP endpoints.

These HTTP endpoints sit behind a public traffic manager, API Management, which can host multiple Function APIs together. The endpoints are deployed in several regions for availability, and each request is sent to the appropriate region.

API Management also takes care of load balancing and failover, and lets the user know which requests were processed and which failed.
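The regional routing and failover behaviour described here can be sketched in a few lines. This is only an illustration of the idea, not how API Management is implemented: the `Region` class, the `pick_region` function, and the region names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical description of one regional deployment."""
    name: str
    healthy: bool = True


def pick_region(preferred: str, regions: list[Region]) -> str:
    """Return the preferred region if it is healthy, otherwise fail over
    to the first healthy region in the list."""
    by_name = {region.name: region for region in regions}
    if preferred in by_name and by_name[preferred].healthy:
        return preferred
    for region in regions:
        if region.healthy:
            return region.name
    raise RuntimeError("no healthy region available")


regions = [Region("westeurope", healthy=False), Region("eastus")]
print(pick_region("westeurope", regions))  # falls over to the healthy region
```

A real gateway would also weigh latency and load; the sketch covers only the failover decision.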

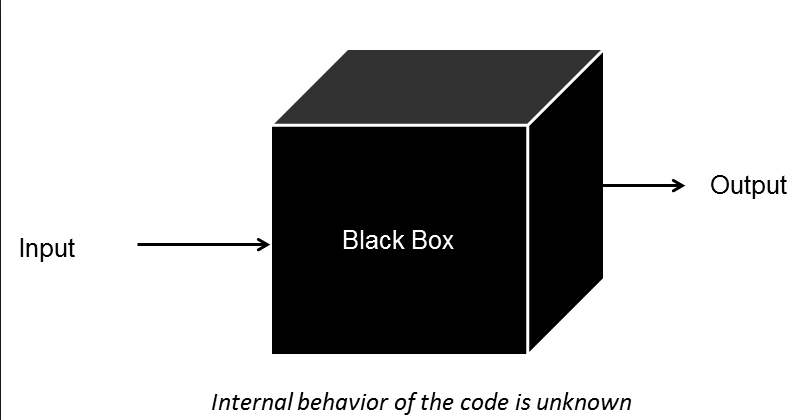

Consumers treat an API as a black box that does something, and the same holds for Azure Functions.

Event stream processing

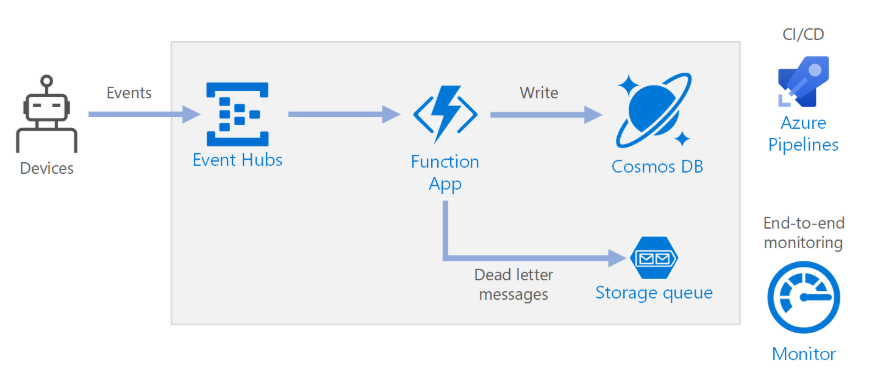

This is one of the most popular scenarios: messages are sent into Event Hubs and a function picks them up.

The flow is essentially the same across most business scenarios worldwide. Alex also mentioned that people are increasingly using Cosmos DB rather than other databases.

The pool of devices may also be an IoT Hub, which can connect to an Event Hub using a generated key to send data. The key is generated when a partition lease is acquired, and each Function App will have one lease on an Event Hub partition.

Note that a maximum of 4 leases can be obtained.

For example, if there are 10,000 messages in an Event Hub, 32 Function App instances are sufficient to process them (depending on VM memory and CPU).
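To see why adding instances beyond a certain point does not help, here is a small sketch. It assumes that each Event Hub partition is read by at most one function instance at a time, so the number of busy instances is capped by the partition count. The `assign_partitions` function and its round-robin strategy are hypothetical; the actual lease balancing is done by the platform.

```python
def assign_partitions(partition_count: int, instance_count: int) -> dict[int, list[int]]:
    """Spread partitions round-robin over instances, one owner per partition.

    Instances beyond the partition count receive nothing and are left out.
    """
    if partition_count < 1 or instance_count < 1:
        raise ValueError("partition_count and instance_count must be >= 1")
    active_instances = min(partition_count, instance_count)
    owners: dict[int, list[int]] = {i: [] for i in range(active_instances)}
    for partition in range(partition_count):
        owners[partition % active_instances].append(partition)
    return owners


# With 4 partitions, only 4 of the 10 available instances get any work.
print(len(assign_partitions(partition_count=4, instance_count=10)))
```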

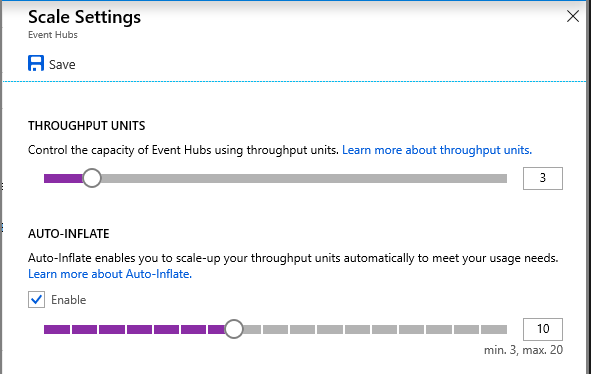

EventHub scale options

Scaling Event Hubs is a major problem that customers face. The Azure portal offers Event Hub partitions only up to 100.

Users who need more partitions can contact Microsoft to obtain them.

Azure Functions Premium plan benefits:

- No more cold starts.

- Pre-warmed up instances based on customization.

- Up to 6 instances can be kept pre-warmed.

- 30 different Function apps can have prewarmed instances.

- Pay separately for instances that are running for a long time.

Alex then moved on to the development tools most people use. Most people have a strong inner loop for development, i.e. debugging from Visual Studio or VS Code. What most people lack is good outer-loop tooling for post-deployment concerns such as remote debugging or deployment issues.

Azure DevOps CI / CD

At Microsoft Build, an Azure Functions build pipeline task was unveiled. We can add PowerShell commands for deployment automation that deploy 15 to 20 Function Apps together. All of this can be done straight from the Designer view; we don’t need to know YAML to write it.

Most companies use staged rollouts, which means multiple copies of the infrastructure are deployed in slots that can be swapped based on use case. Note that staged rollouts are still in public preview.

Monitoring & Diagnostics

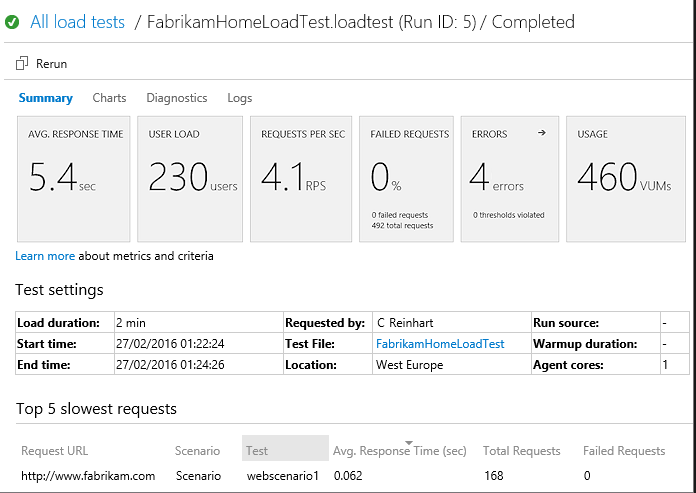

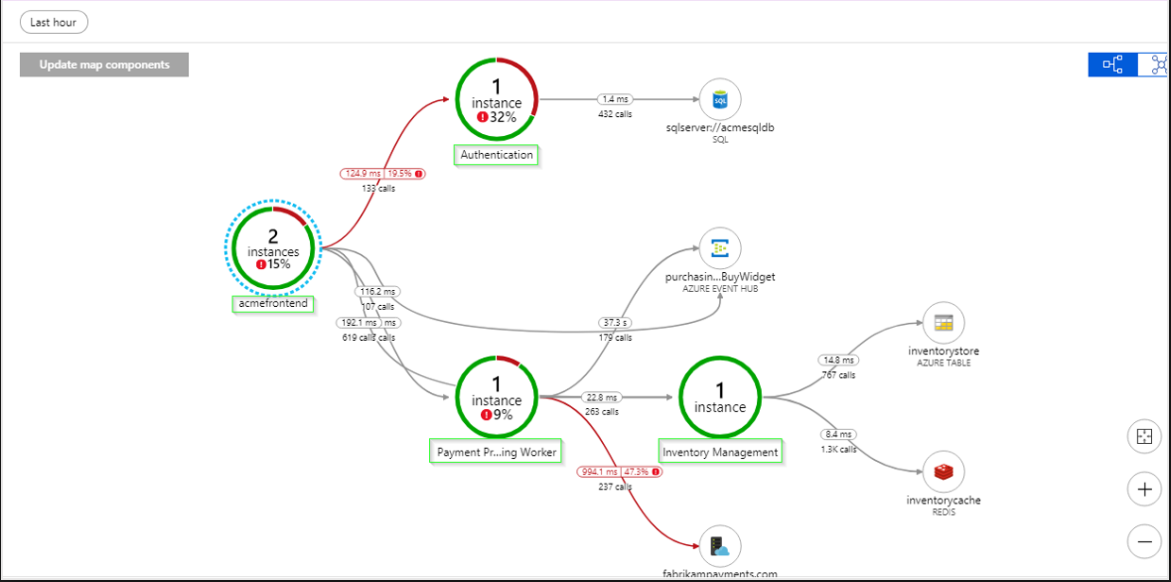

Everybody who uses Azure Functions needs to know what’s happening in their code. For that, Application Insights is the recommended tool. It provides most of the metrics needed to monitor your function app.

- Application Insights can be easily integrated with Azure Functions

- Real-time CPU / MEMORY / REQUESTS can be viewed

- View dependencies of the application with Application Map

- Also, trace and view the Application Map diagram which is generated Automatically based on where the request is arising from.

Now it’s time for some Q & A

Q: Can an Azure DevOps pipeline be built without any YAML knowledge?

A: Pipelines can be built automatically using the Azure CLI or PowerShell, or directly from the DevOps build page.

Q: How does scaling differ from App Service & Premium plan?

A: Auto scaling can be enabled in the App Service plan, but the Premium plan offers more control over scaling options.

- The Premium plan has a scale controller, which can be customized.

- The scale trigger can be customized based on HTTP trigger, message length, etc.

- Pre-warmed instances can be specified.

- Hybrid connections support up to 20 instances.

- An update on this is expected in 4 months at Global Azure Bootcamp.

API Management: deep dive – Part 1 – Miao Jiang

Miao Jiang, Senior Program Manager – API Management brought in the latest updates for API Management.

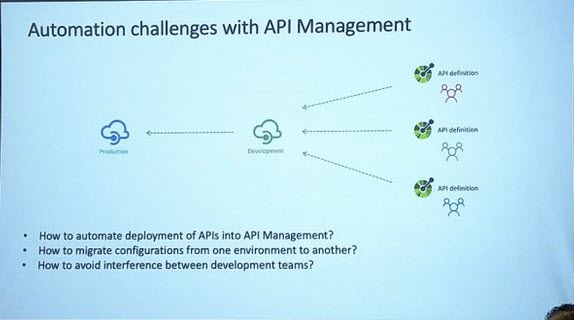

Automation challenges in API Management

- How to automate the deployment of API into API Management?

- How to migrate configurations such as policies and named values from one environment to another?

- How to make sure the changes made by different teams do not interfere with each other?

The suggested solutions to these common challenges depend on multiple factors, such as:

- Team Structure – Single or Multiple teams

- Existing Tools – Azure Pipelines, Azure Repo, GitHub

- Suitable deployment options – Azure Portal, leveraging APIs, PowerShell etc.

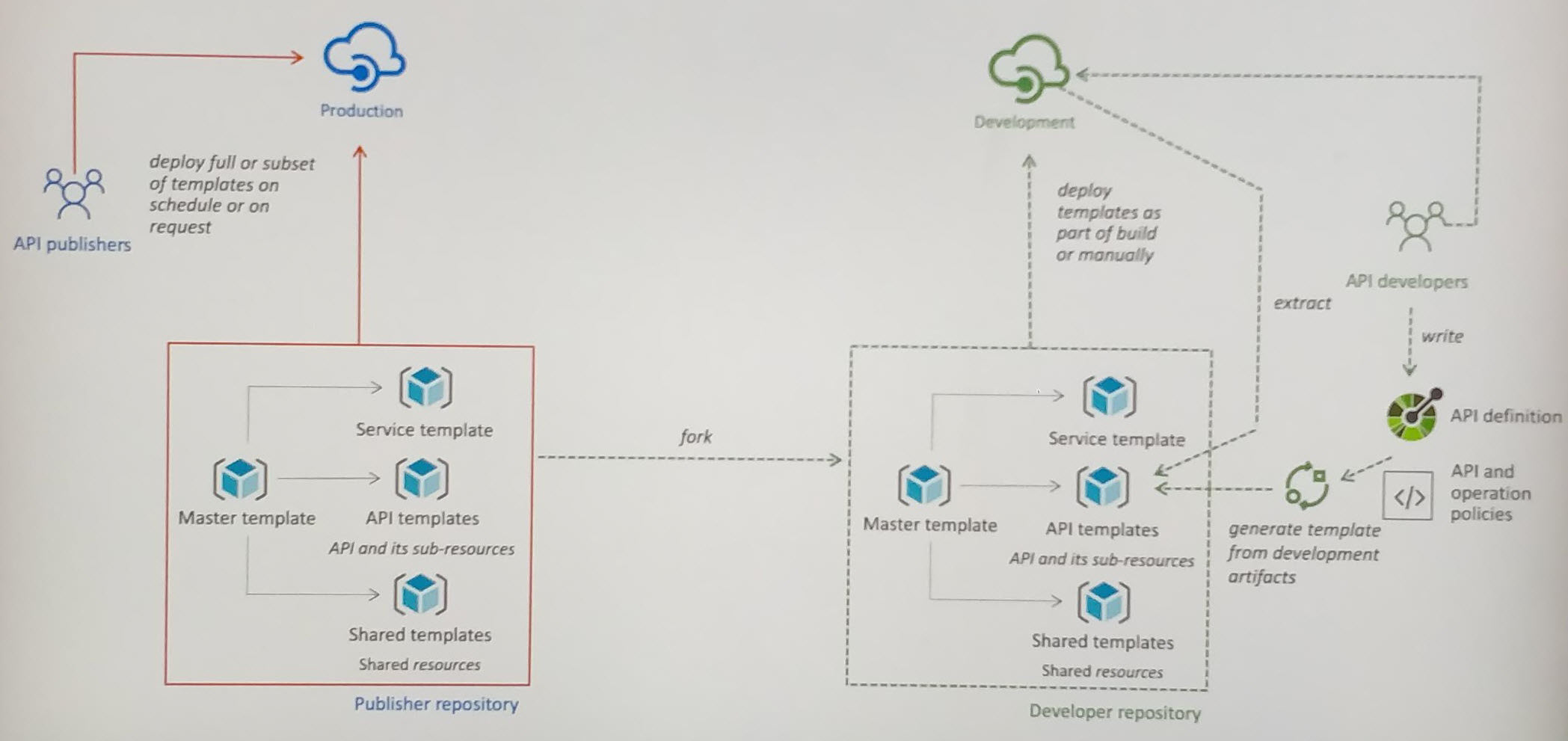

Approach to implement CI/CD pipeline for API Management using ARM Templates

This is explained with an example of an organization which has two different deployment environments like production and development. Here the production instance is managed by a central team called API Publishers. API developers have access to the development environment to test their APIs. He mentioned the challenges in deploying APIs in such an environment.

He also discussed customer experiences with the Azure portal and ways to overcome these challenges.

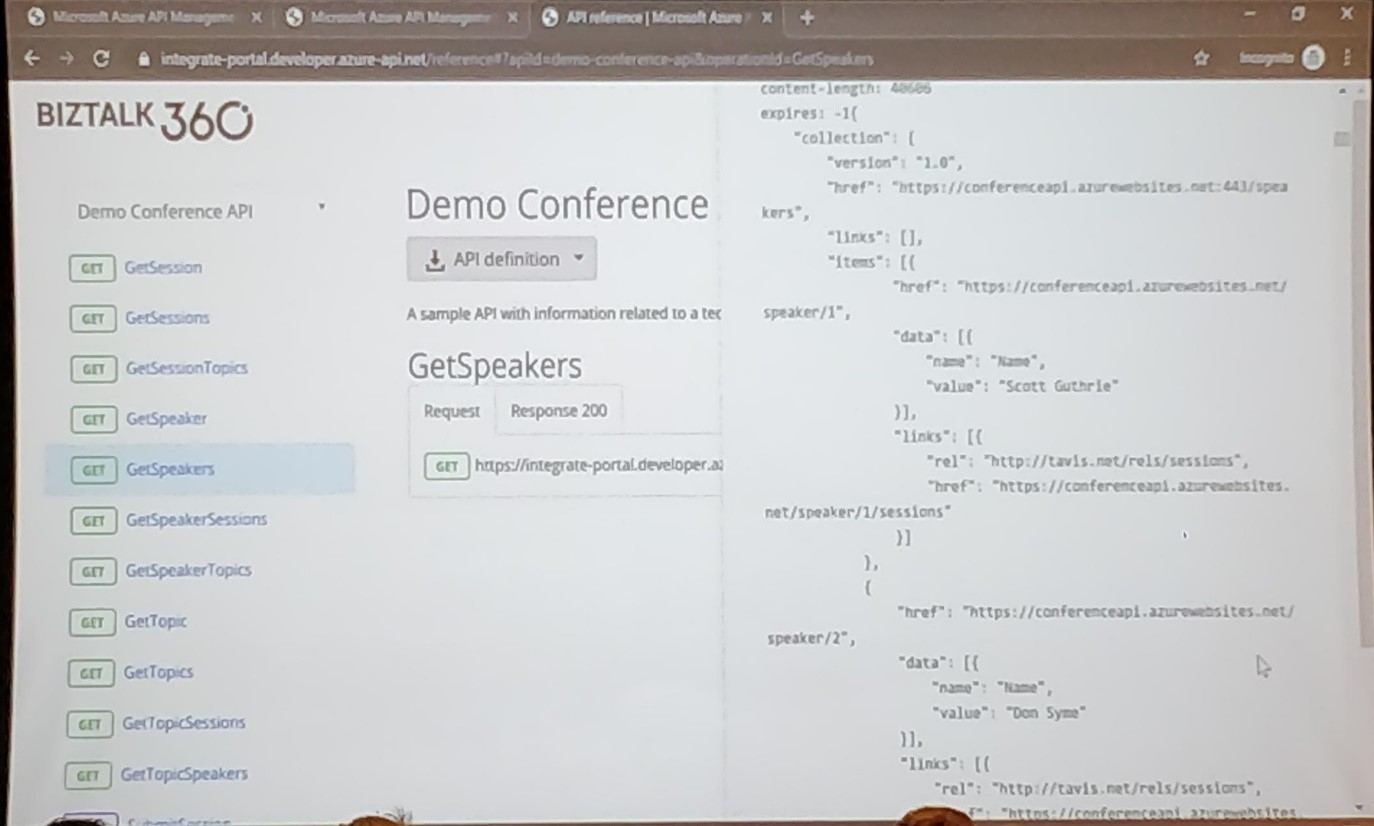

A new tool called Extractor can extract the configuration of an API Management instance into ARM templates so that it can be deployed to multiple environments.

To overcome the challenges faced by API developers working with OpenAPI Specification and Swagger files, another tool called Creator was introduced. It takes an OpenAPI Specification, optionally with the policies that need to be applied to the APIs, and generates ARM templates that can be deployed. He briefly walked through this approach.

Demo to build a CI/CD pipeline using Azure DevOps and Azure Automation tools

He gave a live demo of the above approach using VS Code and the API Management extension, which is in private preview. The extension has really cool integrations, and all the operations can be performed from VS Code without switching to the Azure portal.

Takeaways

- Use separate service instances for different environments.

- Use the Developer or Consumption tier for pre-production environments.

- A template-based approach is recommended because it is consistent, declarative, and supports partial deployments.

- Modularizing templates provides the flexibility to deploy partially when multiple teams work on different APIs.

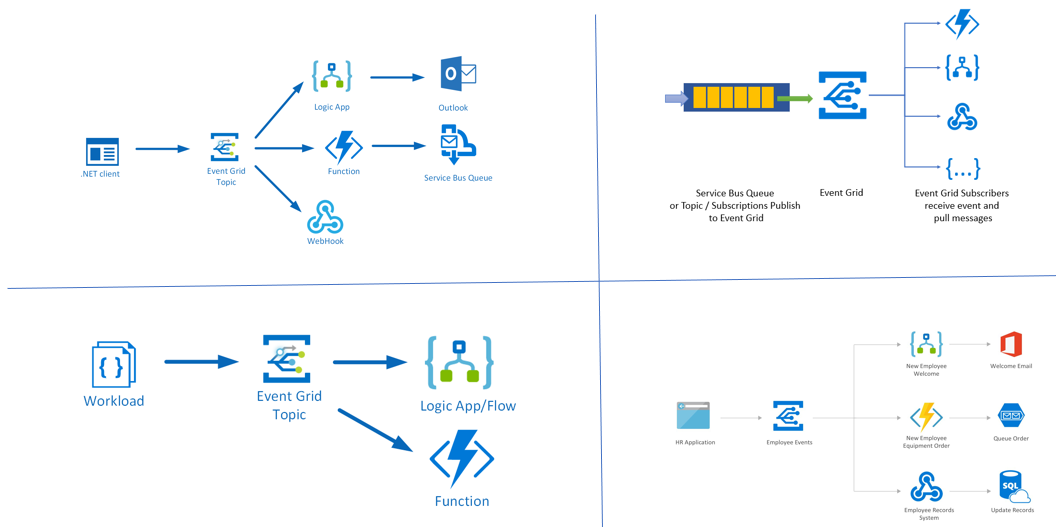

Event Grid update – Bahram Banisadr

Bahram Banisadr, Program Manager at Microsoft on the Azure messaging team, started the session with key points about the vision and updates for Event Grid.

Why Event Grid?

The discussion started with why Event Grid is needed:

- PubSub System

- Event Grid has Event Sources

- Put events on topics

- Don’t care about who is listening

- Event handlers (sinks) – they need to know about events – Aware of publishers

Event Grid has logs and a request-response system. The aims for Event Grid are:

- Every part of Azure should interconnect with Event Grid

- System topics and react natively

- Azure Maps – a new type of IoT events

- Service Bus as an event handler

What’s new?

- Service Bus as an Event handler (Preview) – Now there are two queuing systems (Storage Queue and Service Bus Queue)

- Request/Response with Scenarios – Native Service Bus integration with Azure Platform & Transaction based events

- Supports up to 1MB events (Preview)

- Send full context in the event

- Don’t perform Get after every event

- IoT Hub device telemetry events (Preview)

- Send events on a push basis

- 500 subscriptions per IoT Hub

- Data stream/Azure services

- GeoDR GA

- No additional charge, its metadata RPO

- On new entry with Event Grid, the system will replicate

- When something goes wrong, the replica will kick up and start working

- Zero minutes of topics & subscription lost

- Metadata RTO – 60 mins CRUD operations

- Data RPO – five mins Jeopardized

- DATA RTO – 60 mins for new traffic to flow

- Advanced Filters GA

- Filters on JSON payload

- Nested objects filters supported – Boolean, string, etc

- Comparison between two filters is possible

- Event Domain GA

- 100,000 topics per Event domain

- 100 Event domains per Azure subscription
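To make the idea of advanced filters on a nested JSON payload concrete, the following is a local sketch of how such filters could be evaluated. It is not the service's implementation. The operator names (`StringIn`, `NumberGreaterThan`, `BoolEquals`) and the dotted `key` follow the shape of Event Grid advanced filters, but only this small subset is covered, the string comparison is exact, and the helper names `resolve`, `matches` and `passes_all` are hypothetical.

```python
from typing import Any


def resolve(event: dict, key: str) -> Any:
    """Walk a dotted key such as 'data.order.total' through nested dicts."""
    current: Any = event
    for part in key.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current


def matches(event: dict, flt: dict) -> bool:
    """Evaluate one filter against one event (illustrative subset only)."""
    value = resolve(event, flt["key"])
    operator = flt["operatorType"]
    if operator == "StringIn":
        return isinstance(value, str) and value in flt["values"]
    if operator == "NumberGreaterThan":
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        return is_number and value > flt["value"]
    if operator == "BoolEquals":
        return isinstance(value, bool) and value == flt["value"]
    raise ValueError(f"unsupported operator: {operator}")


def passes_all(event: dict, filters: list[dict]) -> bool:
    """An event is delivered only if every filter matches."""
    return all(matches(event, flt) for flt in filters)


event = {"data": {"order": {"total": 120, "priority": True, "region": "EU"}}}
filters = [
    {"operatorType": "StringIn", "key": "data.order.region", "values": ["EU", "UK"]},
    {"operatorType": "NumberGreaterThan", "key": "data.order.total", "value": 100},
    {"operatorType": "BoolEquals", "key": "data.order.priority", "value": True},
]
print(passes_all(event, filters))
```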

What is Event Domain?

Event Domain is a management construct for topics. Bundling of topics provides the following benefits;

- Manage all topics in one place

- Bundle topics in one place

- Fine-grained authentication per topic

- Deciding who has access to which topics

- Publish all your events to one domain endpoint

- Manage events to respective topics

- Manage publishing of events
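The "publish everything to one domain endpoint, route to the respective topic" idea from the list above can be sketched with an in-memory stand-in. The `EventDomain` class, its methods, and the topic names are hypothetical and exist only for illustration. The sketch assumes each event carries a `topic` field naming its target topic inside the domain.

```python
from collections import defaultdict
from typing import Callable


class EventDomain:
    """In-memory stand-in for a domain: one publish endpoint, many topics."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        """Attach a handler to a single topic inside the domain."""
        self._subscribers[topic].append(handler)

    def publish(self, events: list[dict]) -> int:
        """Route each event to the handlers of its topic.

        Returns the number of handler deliveries. Events for topics without
        subscribers are dropped silently in this sketch.
        """
        delivered = 0
        for event in events:
            for handler in self._subscribers.get(event["topic"], []):
                handler(event)
                delivered += 1
        return delivered


received = []
domain = EventDomain()
domain.subscribe("tenant-a", lambda e: received.append(("a", e["id"])))
domain.subscribe("tenant-b", lambda e: received.append(("b", e["id"])))
domain.publish([{"topic": "tenant-a", "id": 1}, {"topic": "tenant-b", "id": 2}])
print(received)
```

The publisher never learns who is listening; it only names the topic, which matches the pub/sub model described earlier in the session.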

Case Study

Bahram also discussed a case study on Azure service notifications. The highlights of the case study are:

- Azure resource manager

- Azure services – regional model

- Send notifications became massive when sending on a regional basis

- Domain between Azure resource manager & all domain

- Event domain manages all authentication and routing

What’s next for Event Grid?

- Remove workarounds

- Publish events to some other integration

- Publish the event to functions – change key manually

- Greater transparency

- Full context of the events will be available and transparency in the authentication of payloads

- CloudEvents (cloudevents.io)

- Different publishers describe events in different ways. As with CloudEvents, the upcoming Event Grid will extend its support to such publishers.

The session ended with an Event Grid demo that converts an image stored in Blob Storage into a container instance with a unique ID appended.

Hacking Logic Apps – Derek Li

After a quick coffee break, we were back for an amazing session with Derek Li, a Program Manager on Logic Apps. He was accompanied by Shae, an engineer on the Logic Apps team.

Derek focused on brand new content in Azure Logic Apps which people have never seen or used in earlier sessions. The session was entirely based on new things to do, tips & tricks in Azure Logic Apps.

The new features announced for Logic Apps are;

- Inline Code

- What’s new in VS Code for Logic Apps

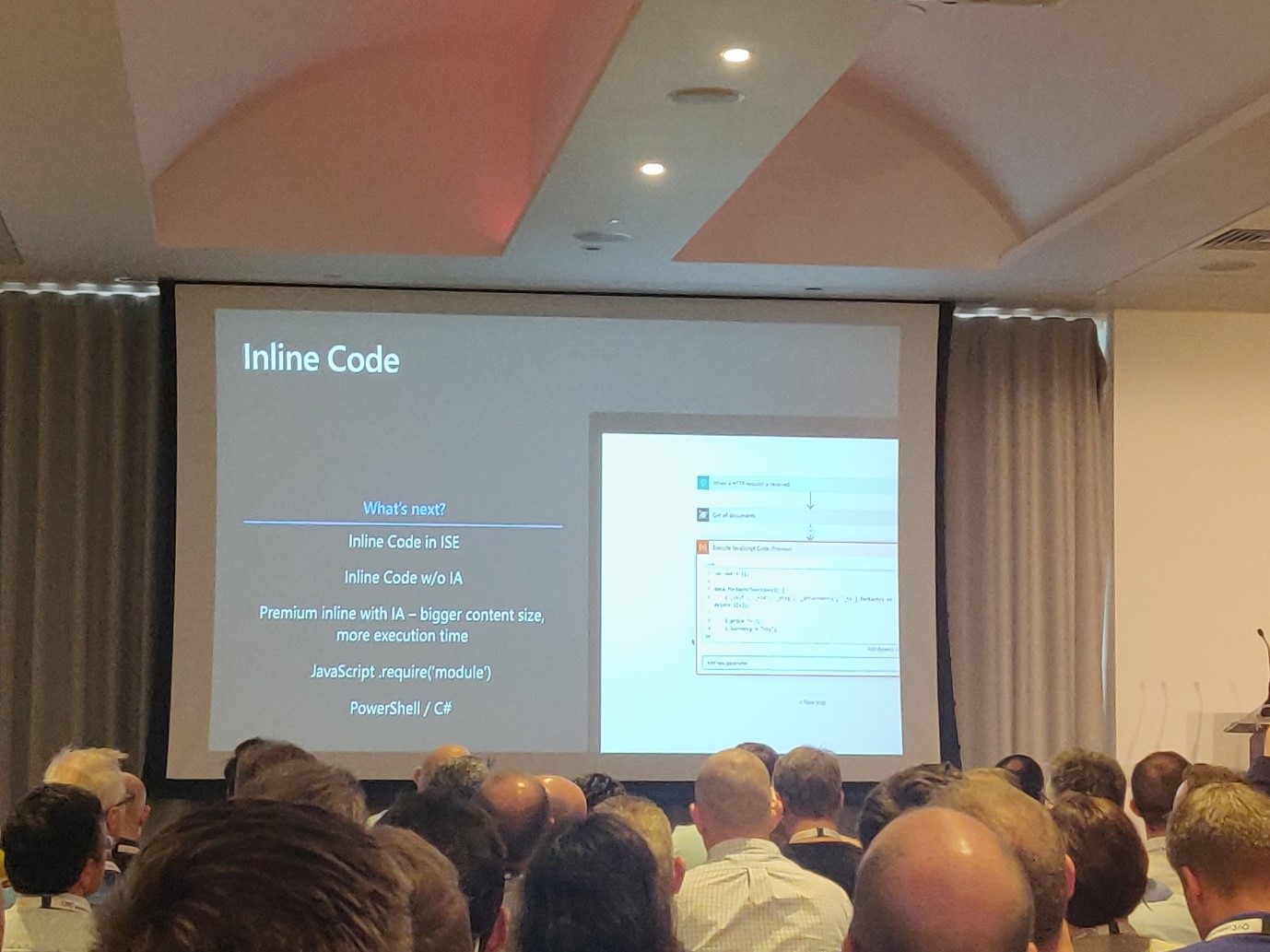

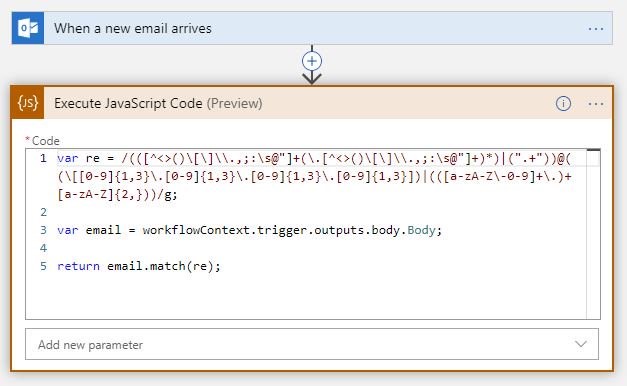

Inline Code

Inline Code is a new feature, now in public preview, that lets you run code inside your Logic App orchestration or workflow. The main advantage is that we don’t need to worry about the execution context. You can easily reference the workflow context and consume data from previous actions as well.

- Derek explained Inline Code with a sample scenario in which a list of email addresses is extracted from a very large message body using a simple regex in JavaScript.

- If your inline code takes more than 5 seconds, consider using an Azure Function instead. Don’t clutter your Logic Apps with loads of inline code.

- For now, only JavaScript is available. More languages are coming soon.
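The email extraction scenario is easy to picture with a short sketch. Note that the Inline Code action itself runs JavaScript. The version below is written in Python purely for illustration, and the pattern and the `extract_emails` helper are assumptions rather than the code shown in the session.

```python
import re

# A deliberately simple pattern; real-world address validation is more involved.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(body: str) -> list[str]:
    """Return the unique email addresses found in a message body,
    in order of first appearance."""
    return list(dict.fromkeys(EMAIL_PATTERN.findall(body)))


body = "Contact alice@example.com or bob@example.org; alice@example.com again."
print(extract_emails(body))
```

A short, self-contained transformation like this fits the guidance above; anything long-running belongs in an Azure Function.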

What’s new in VS Code for Azure Logic Apps

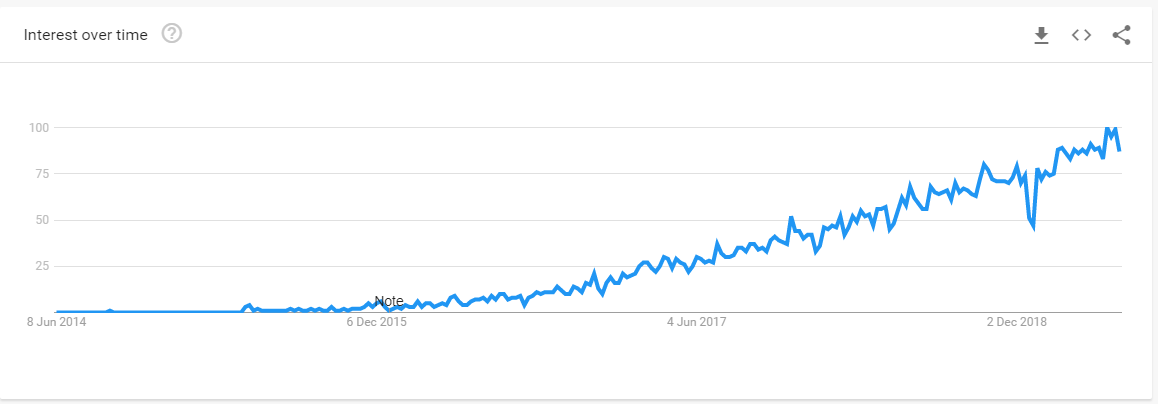

Visual Studio Code has become one of the most popular code editors among programmers worldwide.

Over the years it has received a ton of improvements and extensions that make VS Code stand out from other IDEs.

Derek surprised the crowd by announcing a new update to the Azure Logic Apps extension for Visual Studio Code, available right now (ᵔᴥᵔ), and that the first 10 people with an approved PR in the GitHub repository would get free goodies from Microsoft.

What can you do in VS Code now?

- Create a Logic App project from VS Code

- Add existing Logic Apps or create new ones

- ARM deployment template created automatically

- Azure DevOps integration

Derek also gave a demo in which he created a brand new project from Visual Studio Code and then deployed the Logic App to Azure with the help of DevOps CI/CD.

Tips & Tricks

Some workflows have conditions that must be validated before the orchestration proceeds. A new feature called Trigger Conditions lets you check those conditions within the trigger itself.

- This avoids adding extra actions and cluttering the Designer workspace.

- Runs that do not meet the condition do not clutter the run history, which also reduces cost.

Sliding Window Trigger

There’s a new trigger called Sliding Window Trigger. To regularly run tasks, processes, or jobs that must handle data in continuous chunks, you can start your logic app workflow with the Sliding Window – Schedule trigger. If recurrences are missed for whatever reason, this trigger processes those missed recurrences.

Some of the most common scenarios are:

- Run immediately and repeat every n number of seconds, minutes, or hours

- Delay each recurrence for a specific duration before running

- You can also output the start and end time, which can be used in your scenarios.
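The catch-up behaviour of a sliding window can be sketched as follows. This only illustrates the concept of contiguous windows with start and end times. `pending_windows` and its parameters are hypothetical and do not reflect the trigger's actual implementation or configuration schema.

```python
from datetime import datetime, timedelta


def pending_windows(
    last_end: datetime, now: datetime, interval: timedelta
) -> list[tuple[datetime, datetime]]:
    """Return every complete, contiguous window between the end of the last
    processed window and now, so that missed recurrences are not skipped."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    windows = []
    start = last_end
    while start + interval <= now:
        windows.append((start, start + interval))
        start += interval
    return windows


# The workflow last ran for the window ending at 09:00 and was down until 12:30.
for start, end in pending_windows(
    last_end=datetime(2019, 6, 4, 9, 0),
    now=datetime(2019, 6, 4, 12, 30),
    interval=timedelta(hours=1),
):
    print(start.isoformat(), "->", end.isoformat())
```

Each window's end is the next window's start, which is what lets downstream steps process data in continuous chunks without gaps or overlaps.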

Things in the backlog for consideration

These improvements are in progress and will be rolled out for public use soon. If you are familiar with ARM templates, you know that managing the parameters of an ARM template is a nightmare.

- Parameterizing Logic App templates for ARM

- Better Connection Management

- Editable designer view for VS Code

- Better token picker without changing the browser window

As always, any session ends with an awesome set of questions from the people. Now let’s jump into the Q & A for this session.

Q: Do you know which version of PowerShell is supported in inline code?

A: No, there’s no support for other languages except JavaScript. If you want to add more languages please let us know your use case, we will consider bringing them.

Q: What’s the reason for the 5-second limit in Inline Code?

A: It helps you choose between Azure Functions and Inline Code. If your code runs for more than 5 seconds or performs long operations by calling methods, you should write it in Azure Functions.

Q: In case you say my code completes in 7 seconds, do I still need to use Azure Functions?

A: Please let us know your use case, so we can tweak it.

Q: Is there going to be a mapper for Logic Apps to separate integration accounts?

A: We are looking into it. XSLT, XML transformation and validation are coming soon, and will be available in VS Code and Visual Studio 2019.

Q: How do I export a large, complex Logic App to share with people for presentation purposes?

A: We are working on designer experiences, so stay tuned.

API Management: deep dive – Part 2 – Mike Budzynski

Mike Budzynski, Program Manager – API Management provided the latest updates on API Management in continuation to the previous session from Miao Jiang.

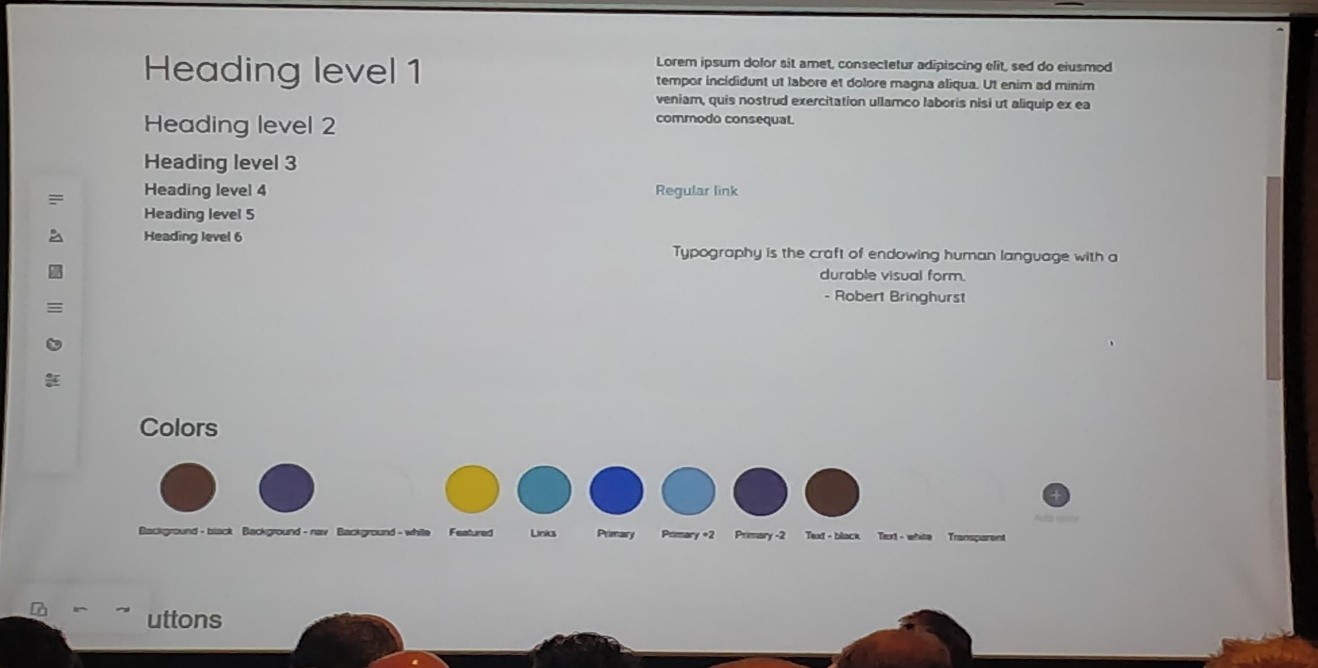

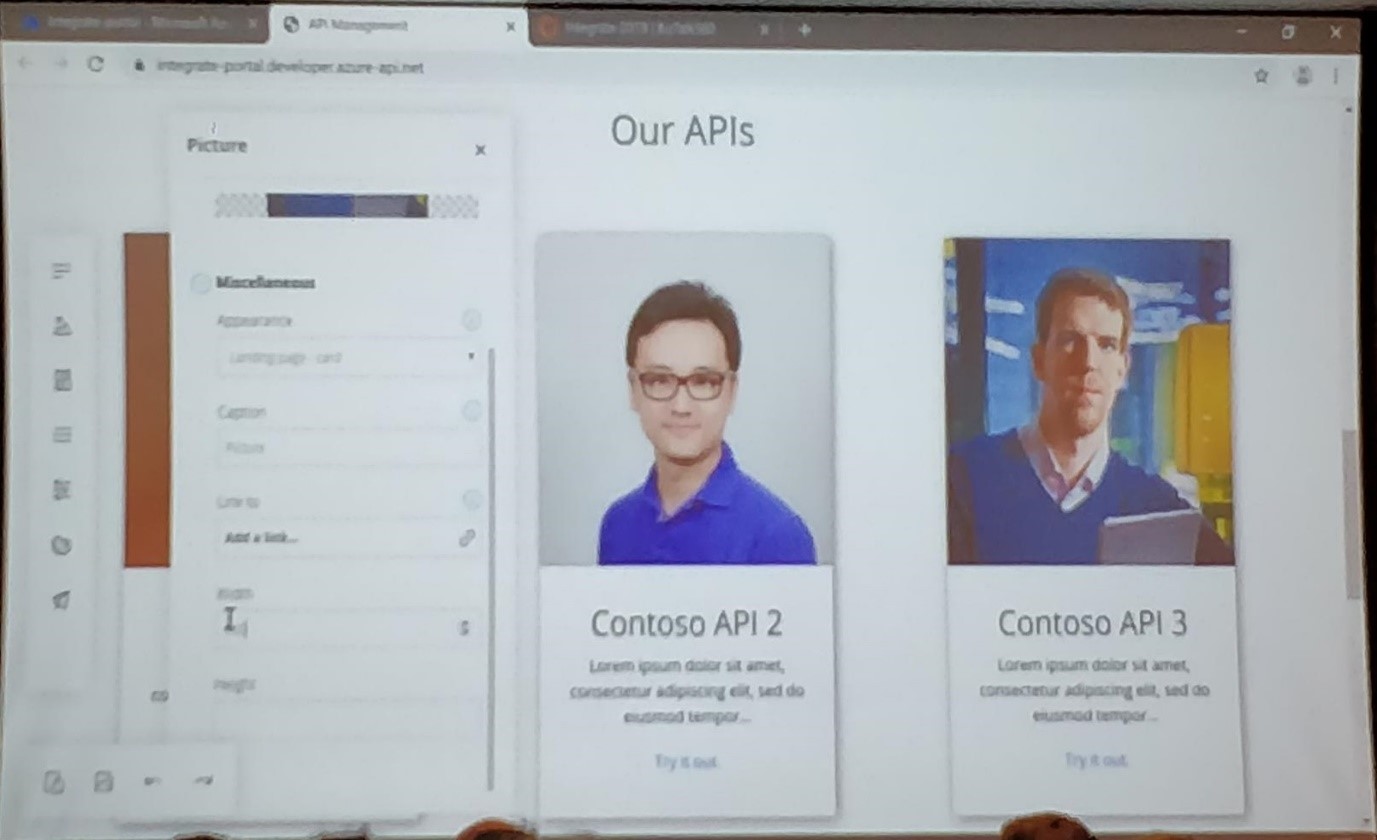

The new developer portal preview is to be launched on 12th June.

The new developer portal is being built from scratch based on both user and market research, latest development and web design trends.

Key features of the new Developer Portal

- The new developer portal is implemented with JAMstack technology, which stands for JavaScript, APIs, and Markup. As a result, the portal has better performance and is more secure and scalable.

- The new developer portal looks modern by default, which reduces the customization that needs to be done.

- The new developer portal is more customizable, with a lot of features.

- The new developer portal is open source and available in a public Git repository.

- It comes built in with the API Management instance.

- It can be self-hosted. This is easy because it is implemented using JAMstack.

- The portal is DevOps-friendly: deployments, migrations, etc. can all be automated.

Mike then presented a demo by making his developer portal look like the Integrate2019 website. In the demo, he explained the latest set of features in the new developer portal.

He explained maintaining different versions of APIs and editing the layouts in his demo.

He then gave an update on general availability of the new developer portal, which can be expected this autumn.

To know more about the new developer portal, see https://aka.ms/apimdevportal

Making Azure Integration Services Real – Matthew Farmer

Integration scenarios

There are four major integration scenarios;

- Application to Application

- Business to Business

- SaaS

- IoT

Each of these integration scenarios comes with many challenges. The key challenges you may face while doing integration are:

- Each system has different interfaces, APIs, data sources and formats.

- Some of the services are service-oriented and others are distributed.

- The services may reside either in the cloud or on-premises.

The solution to the above-mentioned problems is iPaaS – Integration Platform as a Service. Over the last two years, companies have been moving towards iPaaS. It provides numerous benefits besides making integration easier.

Four Major Integration Components

There are four major integration components which are essential to building solutions. The four major components are as follows;

APIs – API Management: Publish your APIs securely for internal and external developers to use when connecting to backend systems hosted anywhere.

Workflows – Logic Apps: Create workflows and orchestrate business processes to connect hundreds of services in the cloud and on-premises.

Messages – Service Bus: Connect on-premises and cloud-based applications and services to implement highly secure messaging workflows.

Events – Event Grid: Connect supported Azure and third-party services using a fully managed event-routing service with a publish-subscribe model which simplifies event-based app development.

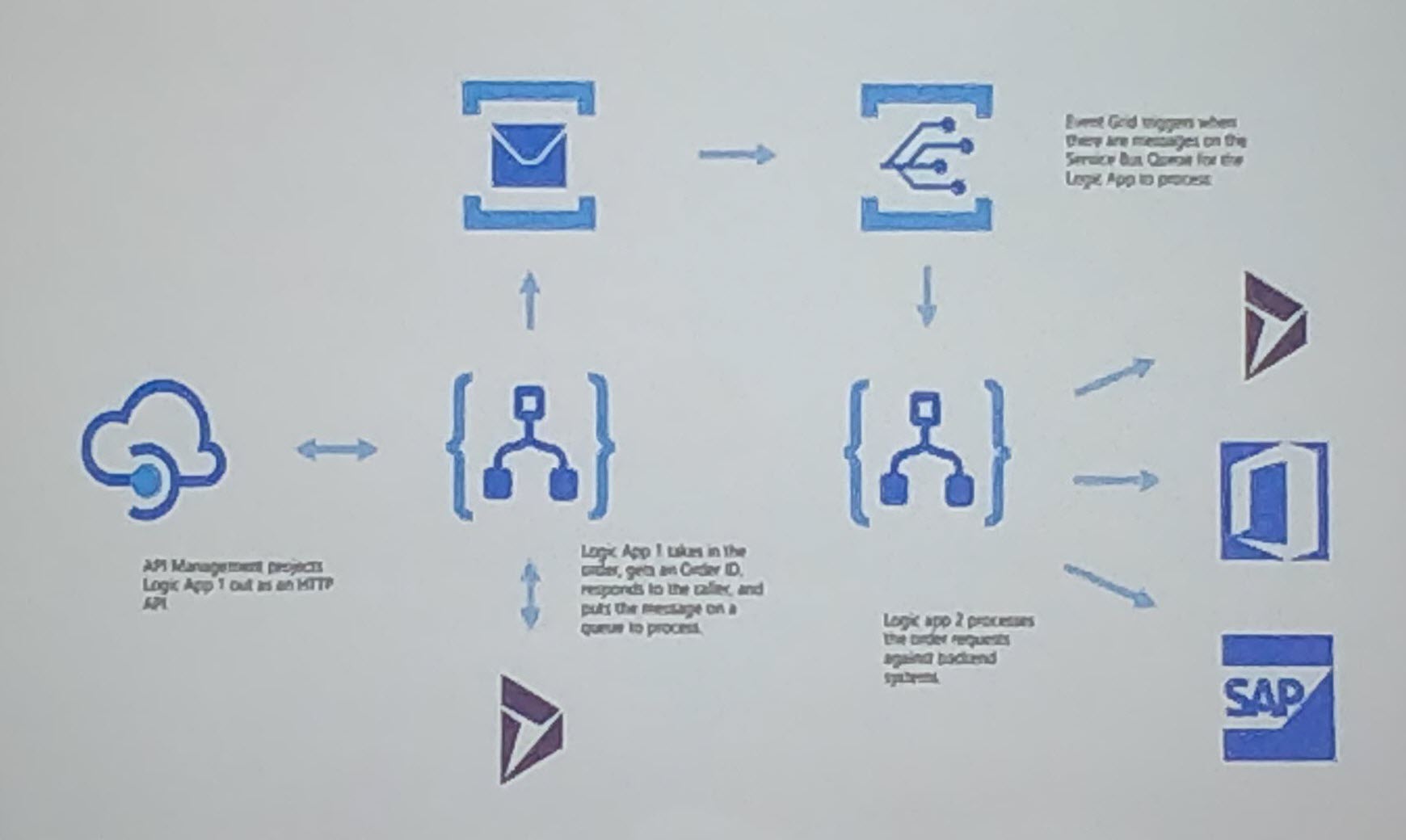

Scenario: Order processing

First, API Management triggers a Logic App. The Logic App processes the order and sends the order information to a Service Bus queue. When the message arrives in the queue, Event Grid picks up the resulting event and triggers another Logic App. Here, Event Grid acts as the glue between the Logic App and the Service Bus queue. Having separate Logic Apps for different business scenarios gives flexibility. The second Logic App then performs the defined operations and sends the order information to other systems such as SAP, Office 365, and Azure.
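The shape of this order flow can be sketched with in-memory stand-ins: one workflow accepts the order and queues it, the queue raises an event on arrival, and a second workflow reacts to that event and forwards the order. None of this uses the Azure SDKs. `OrderQueue`, `intake_workflow` and `make_fulfilment_workflow` are hypothetical names chosen for illustration.

```python
from collections import deque
from typing import Callable, Optional


class OrderQueue:
    """Stand-in for a queue that notifies listeners when a message arrives."""

    def __init__(self) -> None:
        self._messages: deque[dict] = deque()
        self._listeners: list[Callable[["OrderQueue"], None]] = []

    def on_message_available(self, listener: Callable[["OrderQueue"], None]) -> None:
        """Register a listener, playing the role of the event subscription."""
        self._listeners.append(listener)

    def send(self, message: dict) -> None:
        self._messages.append(message)
        for listener in self._listeners:
            listener(self)  # the 'message available' event

    def receive(self) -> Optional[dict]:
        return self._messages.popleft() if self._messages else None


def intake_workflow(order: dict, queue: OrderQueue) -> None:
    """First workflow: accept the order and put it on the queue."""
    queue.send({**order, "status": "accepted"})


def make_fulfilment_workflow(delivered: list[dict]) -> Callable[[OrderQueue], None]:
    """Second workflow: triggered by the event, forwards the order onward."""
    def workflow(queue: OrderQueue) -> None:
        message = queue.receive()
        if message is not None:
            delivered.append({**message, "status": "forwarded"})
    return workflow


delivered: list[dict] = []
queue = OrderQueue()
queue.on_message_available(make_fulfilment_workflow(delivered))
intake_workflow({"id": 1, "item": "coffee"}, queue)
print(delivered)
```

The two workflows never call each other directly, which is what allows separate workflows to be added or changed per business scenario.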

How they work together

API First: Customers build a library of reusable APIs, which can be invoked both within their organization and by partners and applications.

Orchestrator of system: Logic Apps can be used to build flexible, reusable flows. Users get tooling that makes processes easy to understand and manage.

Message stores as Infrastructure: Storing messages becomes a key part of application design, bringing scale, reliability, and agility.

Event Driven model: One can build applications and integration using events, creating a reusable and efficient model.

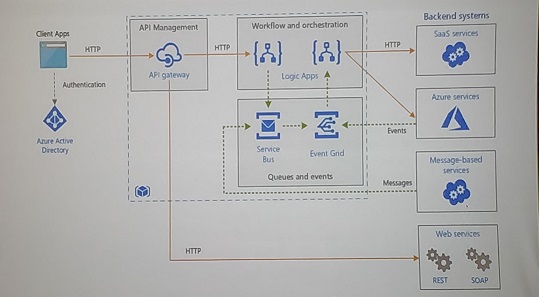

Enterprise Integration on Azure

This reference architecture (https://aka.ms/aisarch) uses Azure Integration Services to orchestrate calls to enterprise backend systems. The backend systems may include software as a service (SaaS) systems, Azure services, and existing web services in your enterprise. Azure Integration Services is a collection of services for integrating applications and data. This architecture uses two of those services: Logic Apps to orchestrate workflows, and API Management to create catalogues of APIs. This architecture is enough for basic integration scenarios where the workflow is triggered by synchronous calls to backend services. A more sophisticated architecture using queues and events builds on this basic architecture.

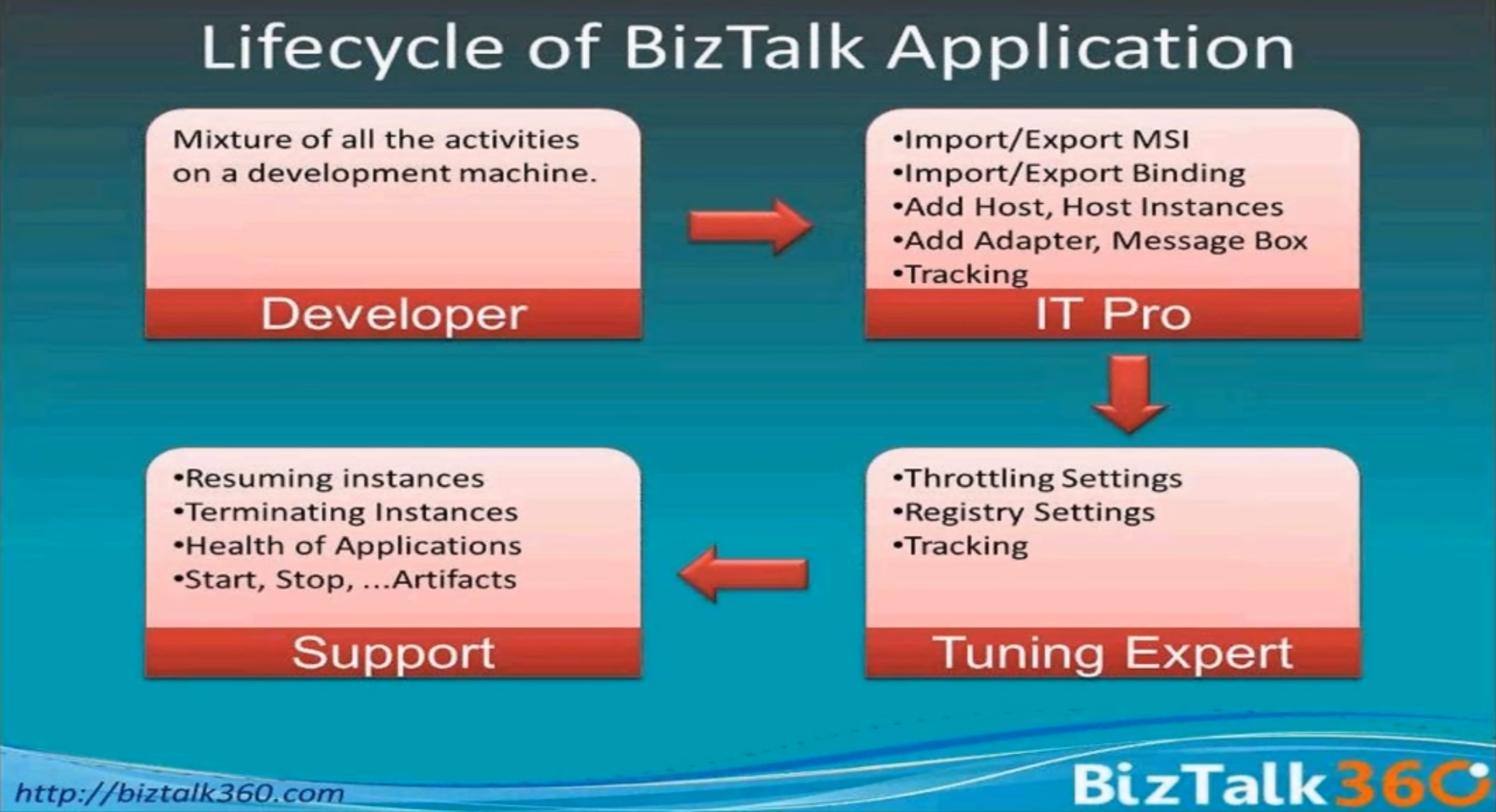

From BizTalk to Azure Integration Services

Many assets can be transferred from BizTalk to Logic Apps easily!

- Schema

- Maps

- EDI Agreements

The above assets can be moved to a Logic Apps Integration account. Orchestrations and pipelines can be re-modeled in Logic Apps.

Tricky cases that are hard to move:

- BizTalk implementations with a huge code base

- Heavy use of the rules engine can sometimes be harder to move

Azure Logic Apps vs Microsoft Flow, why not both? – Kent Weare

After one and a half days of sessions from the Microsoft team, Kent Weare started his session, “Azure Logic Apps vs Microsoft Flow, why not both?”. The session focused on helping enterprises choose between Azure Logic Apps and Microsoft Flow. There are 275+ connectors available in Flow, both from Microsoft and third parties.

Some highlights of Microsoft Flow and Logic Apps features:

Microsoft Flow Features

- Integration Software as a Service

- Azure Subscription not required

- Product group resides in Dynamics Organization at Microsoft

- License entitlement available through Dynamics 365 and Office 365

- Part of Power Platform (PowerApps, Power BI, Microsoft Flow)

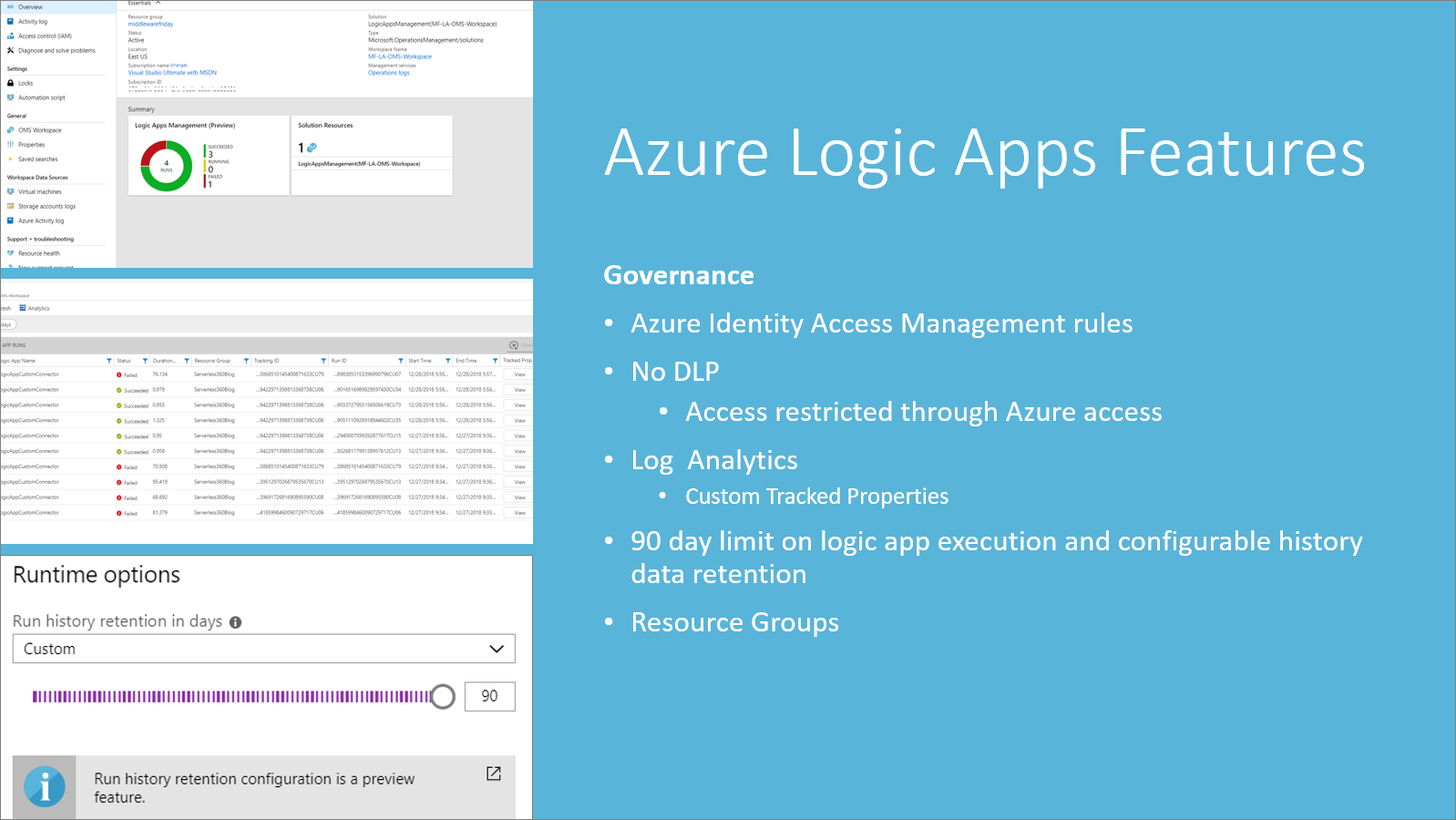

Azure Logic Apps Features

- 275+ connectors available

- Enterprise Connectors

- SAP

- IBM MQ

- AS2

- EDIFACT

- Custom Connectors

- Log Analytics

- No DLP

He then compared Microsoft Flow with Logic Apps. Microsoft Flow targets the Citizen Integrator, while Logic Apps targets the Integration Developer, who is usually involved in more complex integration solutions and development practices as part of a larger solution.

Moving on, he explained the key differences between Flow and Logic Apps, and shared his experiences adopting these services at InterPipeline. Azure Logic Apps can be monitored through Azure Monitoring and Turbo360 (a third-party monitoring tool). He then presented a demo on Microsoft Flow using Microsoft Forms and Power BI.

He also mentioned that the governance features in Azure differ from those provided in Microsoft Flow. This is partly due to the personas typically involved in building solutions, and partly because getting access to Azure is an ‘opt-in’ activity, whereas all Office 365 licensed users have maker privileges in the default Microsoft Flow environment.

If you were looking for a definitive answer to which tool is “better”, you won’t find it in this session. Ultimately, both tools provide tremendous benefits to an organization. The true winner is an organization that identifies how to use each tool to deliver value to the organization. At the end of the day, people should consider the desired business outcomes first, then figure out what is the best tool that helps achieve those outcomes, while putting their personal biases aside.

As a primer to this session, Kent has already published some key points in this blog post: Azure Logic Apps vs Microsoft Flow, Why Not Both?

Your Azure Serverless Management simplified using Turbo360 – Saravana Kumar

Your Azure Serverless Applications Management and Monitoring simplified using Turbo360

One and a half days of Integrate had delivered loads of valuable information on Azure Serverless services, with first-hand product updates from the Microsoft team and an excellent session from Kent on choosing the right service. Next in line was a session on smart solutions from Turbo360 to better manage applications built using Microsoft Azure Serverless services. The session was presented by the captain himself, Saravana Kumar. Throughout the presentation, Saravana cited real-world examples from his own experience, which resonated with the crowd. This article briefly covers the session.

Saravana set the agenda clearly at the beginning of the session:

- Management of your Serverless Apps

- Improving DevOps for your Serverless Apps

- End-to-End tracking for your Serverless Apps

- Customer Scenarios

The discussion kick-started with the question ‘What is a Serverless Application?’. Azure services like Service Bus, Logic Apps, Function Apps, Event Grid, APIM and much more are put together just like LEGO blocks to construct the application.

The out-of-the-box solutions do come with certain challenges in managing them:

- Hard to manage

- Complex to diagnose and Troubleshoot

- Hard to secure and monitor

From BizTalk to Azure, the challenges remain the same.

The stakeholders involved in managing an application, whether in BizTalk or Azure, have different personas with various needs. BizTalk360 was created as a simple tool for support teams to manage BizTalk Server. A similar tool for Azure Serverless application management is Turbo360.

As Saravana rightly mentioned, with more power comes more responsibility. Turbo360 is carefully crafted with capabilities that complement the Azure portal in managing Azure Serverless applications.

In a nutshell, below are the key solutions Turbo360 offers to better manage Azure Serverless applications.

Management of your Serverless Apps

- Composite Applications & Hierarchical Grouping

- Service Map to understand the architecture

- Monitoring under the context of application

- Security defined at the application level

Tools from Turbo360 for better DevOps

Saravana demonstrated the following automation options in Turbo360:

- Templated entity creation

- Auto-process leftover messages

- Auto-process dead letter messages

- Remove storage blobs on condition

- Replicate QA to Production

- Detect & Autocorrect entity states

Business Activity Monitoring in Turbo360 answers the most common question a support person faces: “Where is the message?”

Saravana closed the session by citing a few interesting customer needs that can be met using Turbo360.

- Ensure 15k client devices are active: Data Monitoring is the solution

- Monitoring solutions specific to different teams: composite applications along with monitoring configuration come to the rescue

- Auto-reschedule doctor’s appointments: automated dead letter processing helps achieve this

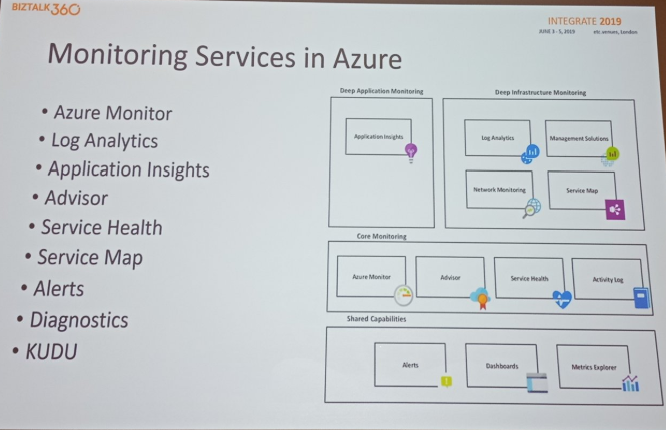

Monitoring Cloud- and Hybrid Integration Solution Challenges – Steef-Jan Wiggers

Steef-Jan Wiggers, Cloud Editor @InfoQ, started his session by describing the integration world. There are a lot of challenges when we build pure cloud or hybrid integration solutions. He then explained hybrid integration solutions using a real-world scenario.

Whenever we build a solution, we should be able to monitor it effectively. There are different types of monitoring challenges to address in Azure, such as:

- Health Monitoring

- Availability monitoring

- Performance monitoring

- Security monitoring

- SLA monitoring

- Auditing

- Usage monitoring

- Application logs

- Business monitoring

- Reporting

Azure provides a set of monitoring tools, such as Azure Monitor, Log Analytics, Application Insights, and Power BI, to monitor these entities efficiently.

People – Process – Products

People

Whenever we build this kind of hybrid solution in an organization, we should support our people with training and upskilling, so they are prepared to support our solutions later. These people should also be talented and passionate enough to learn about these hybrid integration products.

You can enrich people’s knowledge as shown in this image:

Process

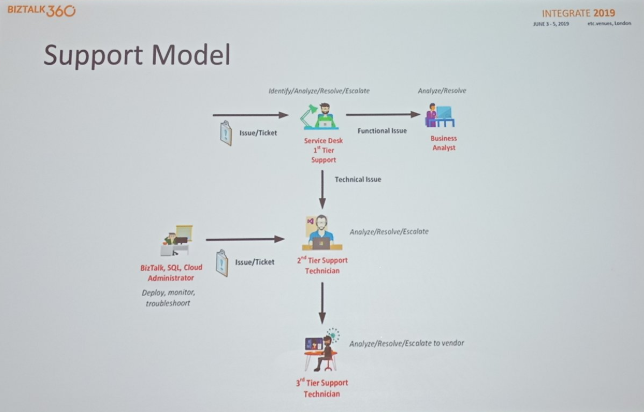

Whenever you build these solutions, make sure you have well-defined processes and that your team understands its roles and responsibilities.

Products

Based on your requirements, understand the products and tools available and make sure you have the right tool for the right job. Various powerful tools are available that you can use effectively in your hybrid integration solution. Some of the tools are:

- Turbo360

- BizTalk360

- Atomic Scope

- Invictus Framework (BizTalk, Azure)

- AIMS

- NewRelic

- DynaTrace etc.

He then explained how Turbo360 handles monitoring and operations for around 16 Azure entities, a number that keeps growing. Additionally, the product gives you end-to-end visibility into your business process.

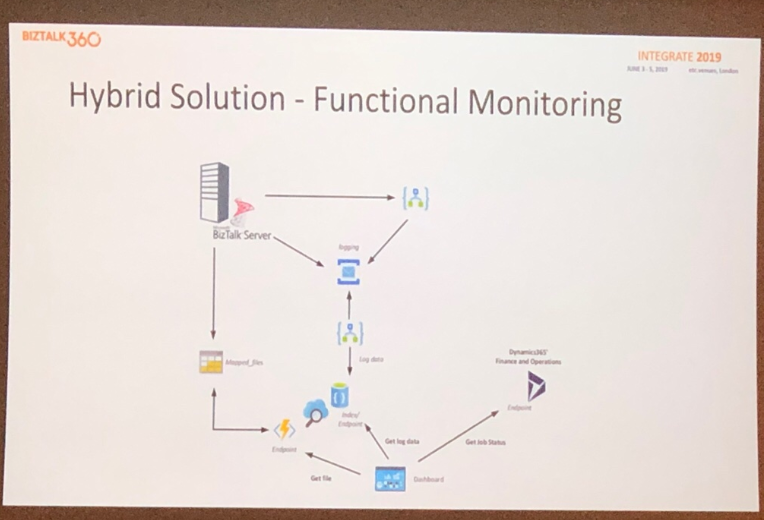

Functional and Technical Monitoring

Functional monitoring enables the user to check whether the entities are up and running without any issues. When a hybrid solution involves, say, BizTalk Server, Function Apps, and Dynamics 365, the logs of all these entities are very useful for determining the state of each entity.

Technical monitoring helps you to improve the performance and health of entities involved in a hybrid integration solution.

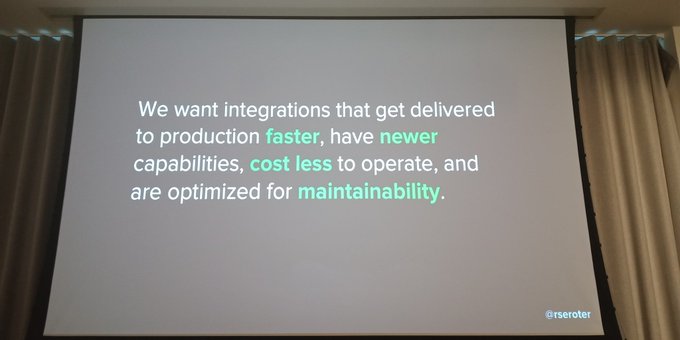

Modernizing Integrations – Richard Seroter

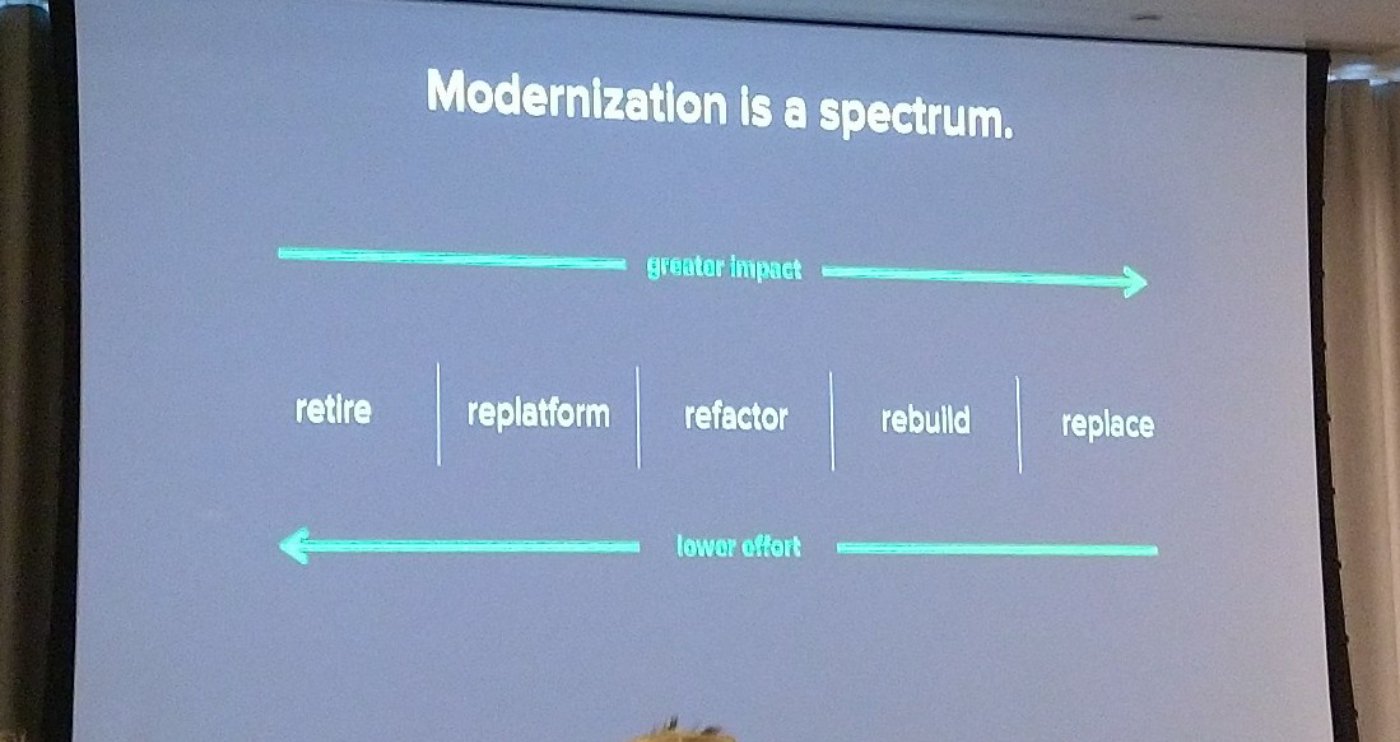

Richard Seroter, Vice President of Product Marketing at Pivotal, started the session by explaining modernization through a set of terms:

Retire, Replatform, Refactor, Rebuild, Replace. He advised that the impact is greater when moving through these terms in the forward direction and lower when moving backward.

Tools like BizTalk Server, WCF, Microsoft Azure Service Bus and SSIS fall along this spectrum. Along the way, he showed analytics on “What you asked to create?”, which indicate that people are asking for less IoT work and more application work. He spoke about his research on application modernization and about what is needed in integration.

He highlighted some considerations for modernizing integration.

- Evaluate System Maturity: Make different choices based on system and problem maturity, run experiments, and use commodity tech

- “Unlearn” what you know: Don’t default to XML formats, centralize less data storage and processing, move business logic into endpoints, be integration enablers rather than gatekeepers, and reduce dependency on Windows

- Introduce new components: Public cloud, Managed services like serverless, Functions, Event brokers, API gateways, service meshes, and protocols.

- Uncover new endpoints and users: SaaS and cloud hosted systems, Custom and commercial API endpoints, Ad–hoc subscribers to data streams and Citizen Integrator.

- Audit existing skills: Teams skills, identifying skills and Assess the cost

- Upgrade interaction patterns: Introduce event Thinking, evolve from sense and change how you assess and interact with production environments.

- Add automated delivery: On-demand environments for developers, continuous integration pipelines, and automated deployment.

- Choose a host location: Rehosting, consider proximity and evaluate usage.

- Decide how to manage it all: Move from monolithic to micro-platforms, and create a consistent approach and centralized management.

He also covered modernization practices for:

- Content-based routing

- De-batching from a database

- High-volume data processing

- Replaying data streams

- Sophisticated business rules

- Stateful workflow with correlation

- Complex data transformation

- Integrating with cloud endpoints

- Throttling and load leveling

- Strangling your legacy ESB

- Getting Integration into production

- Build integration teams
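
To make the first practice in the list above, content-based routing, more concrete, here is a minimal sketch in plain Python. It is not taken from the session: the message shape, the rules, the destination names, and the `route` helper are all hypothetical and only illustrate the idea of choosing a destination by inspecting the message content.

```python
from typing import Any, Callable, Dict, List, Tuple

Message = Dict[str, Any]
Predicate = Callable[[Message], bool]

# Routing table: each rule pairs a predicate on the message content with the
# name of the destination that should receive the message.
# Rules are checked in order, so more specific rules come first.
# All rules and destination names here are hypothetical.
RULES: List[Tuple[Predicate, str]] = [
    (lambda m: m.get("type") == "order" and m.get("amount", 0) >= 1000, "large-orders"),
    (lambda m: m.get("type") == "order", "orders"),
    (lambda m: m.get("type") == "invoice", "invoices"),
]

# Messages that match no rule go to a fallback destination.
DEFAULT_DESTINATION = "dead-letter"


def route(message: Message) -> str:
    """Return the first destination whose predicate matches the message."""
    for predicate, destination in RULES:
        if predicate(message):
            return destination
    return DEFAULT_DESTINATION
```

In a real integration the destinations would likely be queues, topics, or endpoints rather than strings, but the decision logic stays the same: the sender does not pick the receiver, the content does.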

Finally, he suggested making clear decisions about the approaches and technologies for your portfolio.

Cloud architecture recipes for the Enterprise – Eldert Grootenboer

In the last session of the day, Eldert Grootenboer presented interesting recipes for enterprise cloud architecture.

He started by explaining the adoption of cloud computing, beginning with on-premises, where enterprises run and manage the infrastructure themselves. With IaaS, there is no need to care about the infrastructure: hardware and software upgrades, like OS and patches, are taken care of. We need to focus on setting up the platform and proceed from there.

The real cloud journey starts with PaaS, where we just need to focus on business needs and build solutions. This is where serverless comes into the picture: we can focus purely on business needs and not worry about other issues.

He suggested deciding on boundaries and guidelines before implementing a solution:

Architecture Principles – Decide which architecture to implement (there are many, like TOGAF and SAFe, or you can create your own).

Dialogue with Business units, Architects and everyone involved – Make sure the requirements are understood, the architecture is understood, and everyone is on the same page.

SaaS before PaaS before IaaS – Try to buy what is available rather than build it. If you want to build your own solution, try to leverage PaaS.

Event Driven – Make sure a trigger makes something happen.

Loosely coupled – Make sure to decouple each system. Make sure you send events between the applications.

Think about Scalability – The cloud provides virtually unlimited scalability.

Think about DevOps strategy – Think about where the Source code should reside, how to take the solution to production, internal culture.

Integration Patterns – Make sure you leverage these patterns

Middleware – do not use spaghetti solutions. Make sure applications are exposed to middleware.

Best of Breed – Think about your own solutions and problems. Don’t adopt what others use just because it looks attractive.

Security and Governance – Make sure things are secure and the platform is monitored. Make sure nothing is initiated without notice. Think about the cost.

While choosing an architecture, try to explore the Azure components of the serverless platform. There are plenty of services, like Logic Apps, Event Grid, Functions to execute some code, Containers which can be scaled up and down easily, and App Insights for monitoring.

For development, there are Visual Studio, the lightweight VS Code, DevOps, remote debugging tools, and code-sharing platforms.

Better Together – Make sure to use these services together. They provide endless possibilities. Create your own menu – look at your scenarios and choose what services will suit your needs. Expand when needed, not because you can.

Eldert shared some of his customer experiences:

Every customer has their own story and criteria. Understand the customer's wishes and propose a solution with a variation. For one of the solutions, the client preferred microservices that are small, decoupled, and scalable. They also wanted some insights and transformation capabilities.

For this purpose, the solution used Azure Storage to upload files, Event Grid to listen for files arriving in storage, Functions to transform them, and Cosmos DB to store the results. This provided a serverless and event-driven solution for them.
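
The flow of this first customer solution can be sketched in plain Python. This is an illustration only, not the customer's code and not the Azure SDK: a dictionary stands in for Azure Storage, a list stands in for Cosmos DB, and the `transform` and `handle_blob_created` functions, the `key=value` file format, and the example URL are hypothetical. The event dictionary is modeled on the `Microsoft.Storage.BlobCreated` event type that Event Grid emits for new blobs.

```python
from typing import Any, Dict, List

# In-memory stand-in for the Cosmos DB container (assumption for illustration).
document_store: List[Dict[str, Any]] = []


def transform(raw: str) -> Dict[str, Any]:
    """Turn a 'key=value;key=value' line into a dictionary (hypothetical format)."""
    pairs = (item.split("=", 1) for item in raw.strip().split(";") if "=" in item)
    return {key.strip(): value.strip() for key, value in pairs}


def handle_blob_created(event: Dict[str, Any], blob_contents: Dict[str, str]) -> None:
    """Play the role of the Function: react to one event, transform, store.

    `blob_contents` maps blob URLs to file contents and stands in for
    Azure Storage.
    """
    if event.get("eventType") != "Microsoft.Storage.BlobCreated":
        return  # ignore events this handler does not care about
    url = event["data"]["url"]
    document = transform(blob_contents[url])
    document["source"] = url  # keep track of where the document came from
    document_store.append(document)
```

The point of the design is that nothing polls for files: the upload raises an event, the event triggers the transformation, and the result lands in the store.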

Another customer had a different environment that demanded cross-cloud capabilities, compatibility with both legacy and new services, and support for various development stacks.

In this case, Azure Kubernetes Service, a container-based service, was chosen. Kubernetes can run on Azure, Google, and AWS, and it offers full control and language support.

Thinking about Cloud Native?

Containers are IaaS and do not leverage the full capabilities of the cloud. Cloud native starts from PaaS.

Another customer wanted a workflow to manage employee onboarding, which involves assigning assets, filling out a form, and forwarding it to HR. They wanted to automate these steps. The solution had to be easy and agile: a combination of SaaS and PaaS with monitoring and logging.

The solution involved SharePoint, PowerPoint, and Outlook, and leveraged Logic Apps to orchestrate this workflow.

Thinking about DevOps?

It is not technical; it is a change in the way the business must work.

Service Abstraction

One of the solutions included Service Bus, Logic Apps, PowerShell, etc., which needed to be abstracted behind an API. The customer wanted only the endpoint to be exposed, without having to worry about managing SAS tokens, web tokens, etc. They also wanted real-time insights: who is accessing the API, what the load is, and what the errors are. API Management suits this need, as policies can be created, caching can be leveraged using Redis Cache, and security is easy to implement.

Another solution involved web apps that had to be exposed to clients with load balancing, security to prevent vulnerabilities like SQL injection, and SSL offloading. Application Gateway provides this by load balancing and routing web application traffic and making sure websites are protected.

Takeaways

- Make sure scenarios are captured – Understand customer asks and business asks. Understand how to do this.

- Have good architecture – governance and security, available for everyone

- Cost efficient – don’t aim for the cheapest; investigate the architecture to make sure you are using cost-efficient methods

- Cloud computing – a lot of new opportunities and possibilities.

Some interesting links in connection to this session:

Azure API Management DevOps Resource Kit

API Management CI/CD using ARM Templates – Products, users and groups

Exposing Azure Services using Azure API Management

Integrate 2019 Day 1 and Day 3 Highlights

Integrate 2019 Day 1 Highlights

Integrate 2019 Day 3 Highlights

This blog was prepared by

Arunkumar Kumaresan, Balasubramaniam Murugesan, Ezhilarasi Chezhiyan, Hariharan Subramanian, Kuppurasu Nagaraj, Nadeem Ahamed Riswanbasha, Nishanth Prabhakaran, Pandiyan Murugan, Suhas Parameshwara.