In this blog post, we will discuss the Logic Apps engine, the runtime responsible for executing the workflows you create with the Visual Designer. We will cover both the Visual Designer and the runtime behind it, based on a transcript of Kevin Lam’s presentation of Logic Apps in depth at Integrate 2018. This post also aims to provide documentation on the Logic Apps engine, as no clear documentation is available today.

Introduction

Logic Apps was released to the public in July 2016 and, within two years, evolved into a leader in the integration Platform as a Service (iPaaS) space. Logic Apps succeeded Microsoft’s first attempt at offering an iPaaS in the cloud, BizTalk Services. That service later received some updates and was rebranded as Microsoft Azure BizTalk Services, MABS for short, effectively a version 2 of BizTalk Services. However, MABS was deprecated, and Microsoft moved both the VETER pipelines and the EDI/B2B functionality to Logic Apps.

The successor to MABS is Logic Apps – based on a different model

First, it is a Platform as a Service offering, where you do not have to worry about managing any infrastructure. Unlike MABS, you do not have to choose any SKUs. The infrastructure is abstracted away, and you build a flow from triggers and actions by creating a workflow definition. Furthermore, Logic Apps is interoperable with other Azure services like Service Bus, Azure Functions, Event Grid, and API Management. Besides these services, Logic Apps also offers various connectors for other Azure services and SaaS solutions. If a connector doesn’t exist, you can build one yourself.

Developers can build a Logic App, i.e. design a workflow, using the designer in the browser or in Visual Studio with the Azure Logic Apps Tools for Visual Studio. You can view a Logic App itself as a logical container or host of a workflow definition. When the flow runs, it consumes resources on the Azure Platform (these resources are abstracted away from you).

With the designer, you can define a business process workflow or an integration workflow by choosing one of the many predefined templates or an empty one. The predefined templates include, for example, ‘Peek-lock receive a Service Bus message and complete it’ and ‘Peek-lock receive a Service Bus message with exception handling’. Instead of a pre-built template, you can start with an empty one and drag a trigger and actions onto the canvas yourself. Each trigger, action, condition, and so on that you place in the Visual Designer is captured as JSON, which you can examine in the code view.

The Logic App Designer

The Visual Designer is written in TypeScript/React and hosted in an iFrame in the portal. The designer in the Azure Portal is the same designer that is available through the Azure Logic Apps Tools for Visual Studio. In Visual Studio it is hosted in an iFrame as well, so a developer has the same experience in the portal as in Visual Studio.

The Logic App designer uses the OpenAPI (Swagger) definition of each connector to render its inputs and outputs. From that Swagger, the designer works out how to generate the cards as well as the properties for each of the API operations available to you as a developer. Next, the designer renders the tokens for the outputs of each action that gets executed. Lastly, the designer interprets the design objects you put onto the canvas and generates JSON.
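To make the idea of Swagger-driven cards more concrete, here is a minimal Python sketch. It is not the designer's actual code (which is TypeScript/React); the `build_cards` function, the card fields, and the trimmed Swagger document are all hypothetical and only illustrate how operation metadata could be turned into a card with input fields.

```python
# HTTP verbs that can appear as operation keys under a Swagger path item.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}

def build_cards(openapi):
    """Turn each operation in a Swagger document into a simple card
    description: a title plus the names of its input parameters."""
    cards = []
    for path, path_item in openapi.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as shared parameters
            cards.append({
                "title": operation.get("summary", operation.get("operationId", path)),
                "method": method.upper(),
                "path": path,
                "inputs": [p["name"] for p in operation.get("parameters", [])],
            })
    return cards

# Hypothetical, heavily trimmed Swagger document for a mail connector.
swagger = {
    "paths": {
        "/Mail": {
            "post": {
                "operationId": "SendEmail",
                "summary": "Send an email",
                "parameters": [
                    {"name": "To", "in": "formData", "type": "string"},
                    {"name": "Subject", "in": "formData", "type": "string"},
                    {"name": "Body", "in": "formData", "type": "string"},
                ],
            }
        }
    }
}

cards = build_cards(swagger)
print(cards)
```

The point of the sketch is only that the card and its fields are derived from metadata rather than hand-coded per connector.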

You can examine the JSON in code view: in the portal, click “Logic App code view” to see the JSON Domain Specific Language. In the code view, you can edit the JSON and then switch back to the designer. The designer will only render, however, if you have typed in correct JSON, because the designer is a representation of that JSON.

The Domain Specific Language (DSL) for Logic Apps

You can view Logic Apps as workflow as a service in Azure; however, it may not behave like a regular workflow, or like workflows you might have implemented with Windows Workflow Foundation (WF) in the past. A typical workflow first does step A, then step B, then step C, possibly with conditions and loops; it is a forward-chaining type of process. Logic Apps does not behave in that manner, even though it may feel that way when you create a Logic App.

Logic Apps is a job scheduler with a JSON-based DSL that describes a dependency graph of actions, an inverse-directed graph. The Microsoft Pro-Integration team responsible for the service has made it feel as if you are doing step A, then step B, then step C. Under the hood, however, each step declares an inverse dependency on the previous step. If you look at the code view, you will see a runAfter property on each action: step B runs after step A. That is how the sequence of actions comes about when you create your logic app. In the definition below, for example, Initialize_variable runs after Get_route, and Condition runs after Initialize_variable.

    "definition": {
        "$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
        "actions": {
            "Condition": {
                "actions": {
                    "Send_an_email": {
                        "inputs": {
                            "body": {
                                "Body": "Add extra travel time (minutes): @{sub(variables('travelTime'),15)}",
                                "Subject": "Current travel time (minutes): @{variables('travelTime')}",
                                "To": "sj.wiggers@gmail.com"
                            },
                            "host": {
                                "connection": {
                                    "name": "@parameters('$connections')['outlook']['connectionId']"
                                }
                            },
                            "method": "post",
                            "path": "/Mail"
                        },
                        "runAfter": {},
                        "type": "ApiConnection"
                    }
                },
                "expression": {
                    "and": [
                        {
                            "greater": [
                                "@variables('travelTime')",
                                15
                            ]
                        }
                    ]
                },
                "runAfter": {
                    "Initialize_variable": [
                        "Succeeded"
                    ]
                },
                "type": "If"
            },
            "Get_route": {
                "inputs": {
                    "host": {
                        "connection": {
                            "name": "@parameters('$connections')['bingmaps']['connectionId']"
                        }
                    },
                    "method": "get",
                    "path": "/REST/V1/Routes/Driving",
                    "queries": {
                        "distanceUnit": "Mile",
                        "optimize": "timeWithTraffic",
                        "travelMode": "Driving",
                        "wp.0": "21930 SE 51st St, Issaquah, WA, 98029",
                        "wp.1": "3003 160th Ave, Bellevue, WA, 98008"
                    }
                },
                "runAfter": {},
                "type": "ApiConnection"
            },
            "Initialize_variable": {
                "inputs": {
                    "variables": [
                        {
                            "name": "travelTime",
                            "type": "Integer",
                            "value": "@div(body('Get_route')?['travelDurationTraffic'],60)"
                        }
                    ]
                },
                "runAfter": {
                    "Get_route": [
                        "Succeeded"
                    ]
                },
                "type": "InitializeVariable"
            }
        },
        "contentVersion": "1.0.0.0",
        "outputs": {},
        "parameters": {
            "$connections": {
                "defaultValue": {},
                "type": "Object"
            }
        },
        "triggers": {
            "Check_Travel_Time_every_week_day_morning": {
                "recurrence": {
                    "frequency": "Week",
                    "interval": 3,
                    "schedule": {
                        "hours": [
                            "7",
                            "8",
                            "9"
                        ],
                        "minutes": [
                            0,
                            15,
                            30,
                            45
                        ],
                        "weekDays": [
                            "Monday",
                            "Tuesday",
                            "Wednesday",
                            "Thursday",
                            "Friday"
                        ]
                    },
                    "timeZone": "Pacific Standard Time"
                },
                "type": "Recurrence"
            }
        }
    }

The JSON DSL also allows you to define actions with no dependencies at all, in which case everything runs in parallel. Moreover, every action is essentially a task that runs in the background, which makes the engine highly parallelizable, so your workflows can run in parallel. So keep in mind as you write your logic apps: it is a dependency graph, not a step-one, step-two process!
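As a rough illustration of the dependency-graph idea, the following Python sketch takes the runAfter relationships from the definition above and groups the actions into "waves" that could run at the same time. This is not how the Logic Apps engine is implemented; the `execution_waves` helper is a hypothetical name, and it simply uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter

# runAfter relationships taken from the workflow definition above:
# each action maps to the set of actions it has to wait for.
run_after = {
    "Get_route": set(),
    "Initialize_variable": {"Get_route"},
    "Condition": {"Initialize_variable"},
}

def execution_waves(dependencies):
    """Group actions into waves. Every action in a wave has all of its
    dependencies satisfied, so the actions in one wave could run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves

print(execution_waves(run_after))

# Two actions without dependencies end up in the same wave.
print(execution_waves({"A": set(), "B": set(), "C": {"A", "B"}}))
```

The travel-time example comes out as three waves of one action each because every action depends on the previous one. As soon as two actions have an empty runAfter, they share a wave.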

Execution of a Logic App

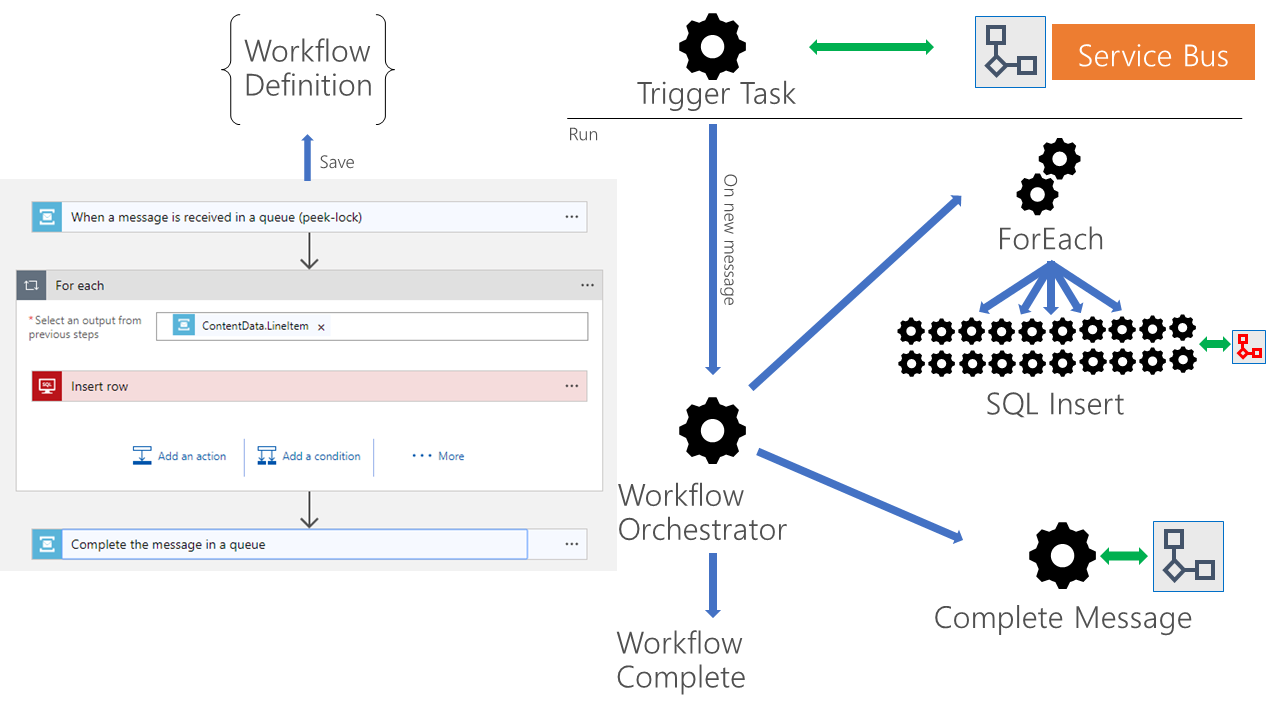

How does a Logic App run when, for instance, it is triggered by a message arriving on Service Bus, and each message contains an array of objects? The picture above depicts the answer. The Logic App goes through the objects using a ForEach, and each object is stored in an Azure SQL database (insert operation). Once it has looped through all the objects, the Logic App completes the message.

Let’s go a little deeper. Once you save a Logic App, you have a workflow definition (see also the previous section about the DSL). The definition is saved on the Azure Platform (that is, on some server in an Azure datacenter), and the Logic App Resource Provider (RP) can interpret that definition. This resource provider is one of the components of the Logic Apps service, and its responsibility is to read the workflow definition and break it down into a composition of tasks with dependencies. Next, it stores those tasks in the backend (Azure). Subsequently, the engine (Logic App Runtime) picks up the tasks and does the following:

- There is a trigger task, in our example a Service Bus trigger. Hence the engine monitors Service Bus for new messages at the set interval. Note that the Service Bus trigger uses long polling for 30 seconds, so there is never a need to poll more often than every 30 seconds.

- Once a new message arrives in Service Bus, the trigger tells the Workflow Orchestrator that a new instance of this workflow definition should be created.

- Next, the Workflow Orchestrator sees in the definition that the first action is a ForEach and that the ForEach receives an array (collection) of objects.

- The ForEach runs in parallel (by default in batches of 20), and you can configure that in the portal (see also Serverless Notes tip – Improve Performance Using Parallelism).

- The SQL action runs in parallel and keeps inserting until the whole collection is done.

- Then the Workflow Orchestrator receives a notification that the ForEach is done.

- Next, the Workflow Orchestrator sees in the definition that the next step is “Complete Message”.

- Lastly, the Service Bus action completes the message on Service Bus.
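The steps above can be sketched in a few lines of Python. This is only an analogy, assuming a thread pool as a stand-in for the distributed task engine; `insert_row`, `run_for_each`, and `handle_message` are hypothetical names, and the in-memory `table` list stands in for the SQL database.

```python
from concurrent.futures import ThreadPoolExecutor

DEFAULT_CONCURRENCY = 20  # the default ForEach parallelism mentioned above

def insert_row(item, table):
    """Hypothetical stand-in for the SQL insert action."""
    table.append(item)
    return item["id"]

def run_for_each(items, table, concurrency=DEFAULT_CONCURRENCY):
    """Run the insert for every item, at most `concurrency` at a time,
    and return only after every iteration has finished."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(lambda item: insert_row(item, table), items))

def handle_message(message, table):
    """Process one message: loop over its objects, then complete it."""
    inserted = run_for_each(message["body"], table)
    message["completed"] = True  # stand-in for the 'Complete Message' action
    return inserted

message = {"body": [{"id": i} for i in range(50)], "completed": False}
table = []
inserted_ids = handle_message(message, table)
print(len(table), message["completed"])
```

The message is completed only after the loop has finished, which mirrors the orchestrator waiting for the ForEach notification before it moves on to the next step.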

Note that the execution of the workflow consists entirely of tasks running in the background; there is no compiled code. The Resource Provider interprets the JSON workflow definition and creates different tasks in the backend to be executed by the Logic App Runtime.

The Task Resiliency

The Logic Apps service has a highly resilient task processor with no active thread management

The tasks are distinct jobs that get distributed across a lot of nodes that are running in the backend. Furthermore, since there’s no active thread management, your Logic App runs (instances) can exist in parallel across all these machines.

Since running tasks is not limited to a single node, the tasks are more resilient. If a node fails, others are still running, so handling transient failures becomes easy. The Logic App Runtime (Workflow Orchestrator) monitors which tasks have been completed, and if one is not complete, it reschedules that task. In this way, the engine ensures at-least-once execution.

To conclude: if a task doesn’t respond, the Workflow Orchestrator assigns a new job (as stated earlier, execution is guaranteed at least once). In the cloud world, you need to think about eventual consistency, idempotency, and at-least-once execution. Those things go together in our new cloud world!
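Because a task may be executed more than once, the receiving side should be idempotent. The Python sketch below shows one common way to achieve that, by remembering which task IDs have already been applied. The `IdempotentInserter` class and the task IDs are hypothetical and not part of Logic Apps; a real system would keep the seen IDs in durable storage.

```python
class IdempotentInserter:
    """Hypothetical handler that tolerates at-least-once delivery by
    remembering which task IDs it has already applied."""

    def __init__(self):
        self.rows = []
        self.seen_ids = set()

    def handle(self, task_id, payload):
        """Apply the payload once; return False for a repeated task ID."""
        if task_id in self.seen_ids:
            return False  # duplicate delivery: nothing left to do
        self.rows.append(payload)
        self.seen_ids.add(task_id)
        return True

inserter = IdempotentInserter()
first = inserter.handle("task-1", {"order": 42})
# Suppose the orchestrator never saw the completion and reschedules the task.
second = inserter.handle("task-1", {"order": 42})
print(first, second, len(inserter.rows))
```

The second delivery is recognized and ignored, so the row is stored exactly once even though the task ran twice.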

Furthermore, Microsoft has built in retry policies, so that transient failures lead to a retry (see also Serverless Notes – Configure Retry Settings). Note that most Logic Apps parts (actions, triggers) are API-based and communicate with different endpoints. Hence, network blips, timeouts, and other transient failures can be retried (by default there is a retry policy of four retries at exponentially increasing intervals).
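A retry policy with exponentially increasing intervals can be sketched as follows. This is a simplified illustration, not the service's implementation: `call_with_retries` and `TransientError` are hypothetical names, and the delays of 1, 2, 4, and 8 seconds are assumptions chosen for readability rather than the intervals Logic Apps actually uses.

```python
import time

class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""

def call_with_retries(operation, retries=4, base_delay=1.0, sleep=time.sleep):
    """Call operation(); on TransientError retry up to `retries` times,
    doubling the wait before each new attempt."""
    attempt = 0
    while True:
        try:
            return operation()
        except TransientError:
            if attempt == retries:
                raise  # retries exhausted: surface the failure
            sleep(base_delay * (2 ** attempt))
            attempt += 1

# Usage: an operation that fails twice and then succeeds. The sleep function
# is replaced by a recorder so the example finishes instantly.
calls = {"count": 0}

def flaky_operation():
    calls["count"] += 1
    if calls["count"] <= 2:
        raise TransientError("network blip")
    return "ok"

delays = []
result = call_with_retries(flaky_operation, sleep=delays.append)
print(result, delays)
```

Passing the sleep function as a parameter keeps the sketch testable. With the defaults, an operation that never succeeds is attempted five times in total (one initial call plus four retries) before the error is raised.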

Recap

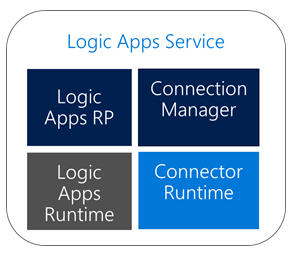

So far, we have discussed the designer, the DSL, and the execution of a Logic App under the hood (tasks). But what about the Logic Apps service in general? It consists of four general components. First, there is the Logic Apps RP, a Resource Provider that is essentially the Logic Apps frontend handling all the requests (also discussed in the section on the execution of a Logic App). This component reads the workflow definition, breaks it down into component tasks with dependencies, and puts those into storage, where the backend picks them up for processing.

The Logic Apps Runtime is a distributed compute layer that coordinates the tasks your logic app has been broken down into. Then there is the Connection Manager, which manages the connection configuration, token refresh, and credentials of your API connections. Finally, the Connector Runtime hosts the abstractions of all the APIs you use (sometimes these are codeful, sometimes codeless) and manages those abstractions for you.

Conclusion

That’s it. I hope this blog post provides a better understanding of the designer, the Logic Apps service components, and the execution of a Logic App instance.